EvalOps: Effective Evaluation in a Changing AI Landscape

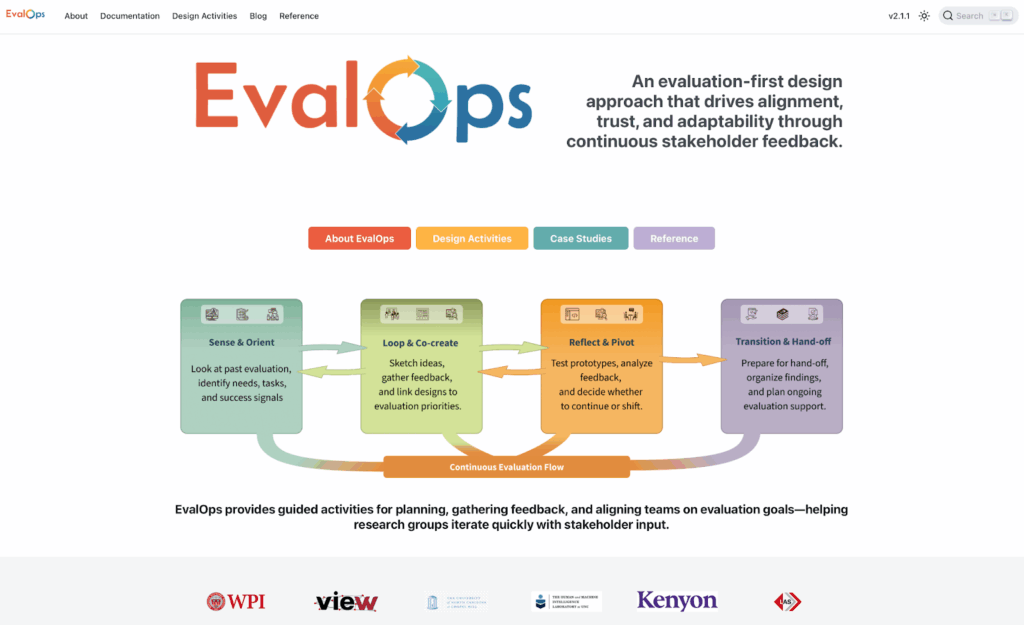

An evaluation-first design approach that drives alignment, trust, and adaptability through continuous stakeholder feedback.

Bijesh Shrestha¹, Hilson Shrestha¹, Karen Bonilla¹, Noëlle Rakotondravony¹, Mengtian Guo², Yue (Ray) Wang²*, R. Jordan Crouser³*, Lane T. Harrison¹*

Affiliations: ¹ Worcester Polytechnic Institute, ² UNC–Chapel Hill, ³ Kenyon College

*Principal Investigator

AI tools can produce impressive results in isolation, but analysts judge them in context. It is not enough for a system just to run. It has to connect to the tasks analysts actually do, support their decision making, and earn their trust through evidence. Models improve, interfaces shift, new features arrive, and yet the hard questions persist: Does it really help analysts do their work? How do we know if it supports the right tasks? What evidence do we have that it will work beyond a demo?

This gap between tools and outcomes is not surprising. Part of the challenge is that most development practices focus on the system itself. MLOps focuses mainly on models and pipelines, while analysts care just as much about usability, reliability, and alignment with their workflows. Evaluation often comes too late in the development process or happens as one-off studies that do not reflect what or who the tool was actually built for.

Bottom Line Up Front

- Keeps evaluation active throughout the design lifecycle, not just at the end.

- Focuses on cadence, roles, and metrics.

- Developed and tested on two analyst-facing tools.

- Provides lightweight EvalOps design sheets that teams can reuse across projects.

- Includes an EvalOps website for continuity beyond handoff: design sheets, resources, repos, walkthroughs, and support materials.

Small loops reveal big problems early.

EvalOps Origins

During SCADS 2024, our team developed prototypes for exploring AI-generated document summaries. When describing their challenges, analysts were direct: they did not view AI summaries as trustworthy, so they needed ways to inspect them further. Our team sketched ideas, made quick mockups, and implemented changes in near-real-time feedback loops with analyst stakeholders. We realized that evaluation wasn't slowing the design process down; it was speeding it up. By treating analysts as integral partners in the creation process, this collaborative approach made evaluation a driving force of the design.

SCADS provided the right environment to act quickly once we saw these gaps. Alternating between building tools for analysis and documenting the approach we used led to a design framework we call EvalOps: a methodology built to keep evaluation moving alongside design and development, even with tight timelines and shifting teams.

EvalOps in Practice

An evaluation-first approach makes evaluation part of the design and development process, clarifying when it happens, who participates, and how metrics support stakeholder adoption. In practice, that means planning regular evaluation loops, bringing in the right mix of analysts, designers, developers, and sponsors, and choosing a set of task- and domain-specific metrics that stay tied to real analytical work.

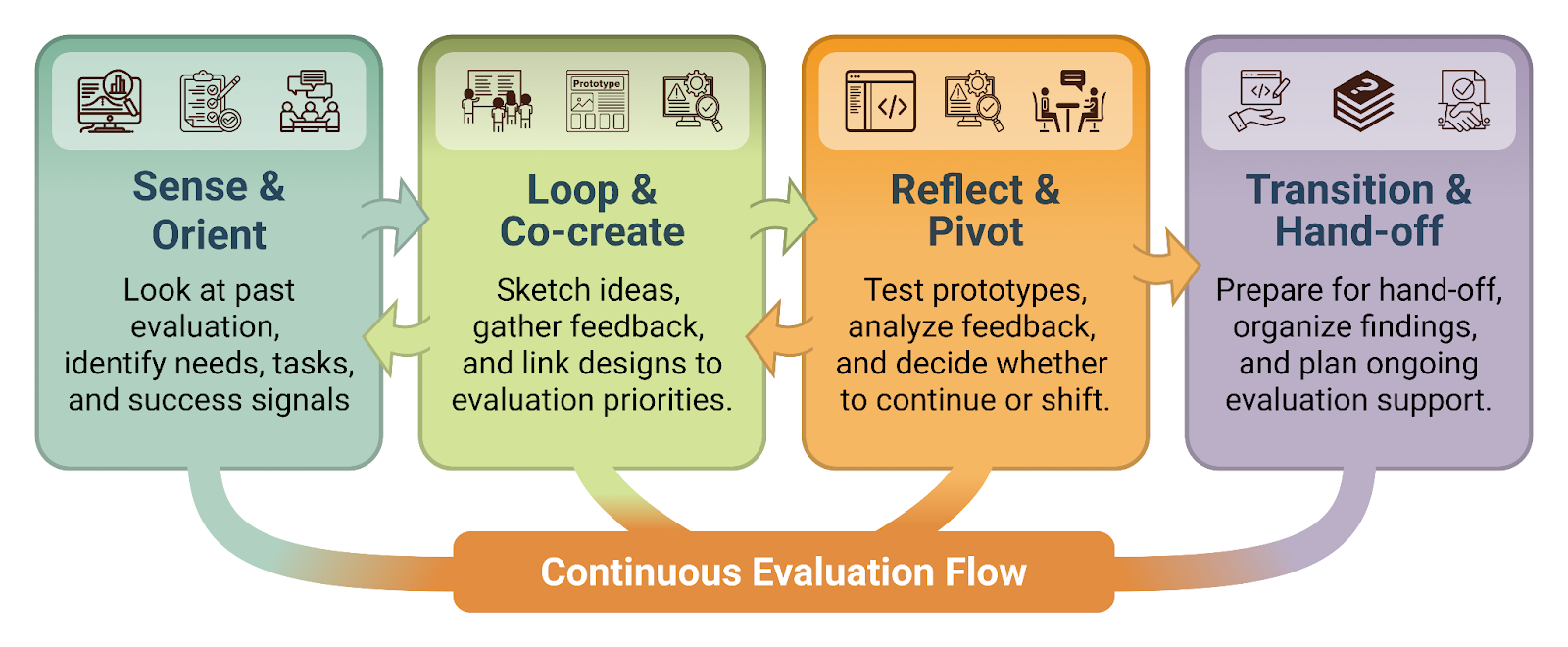

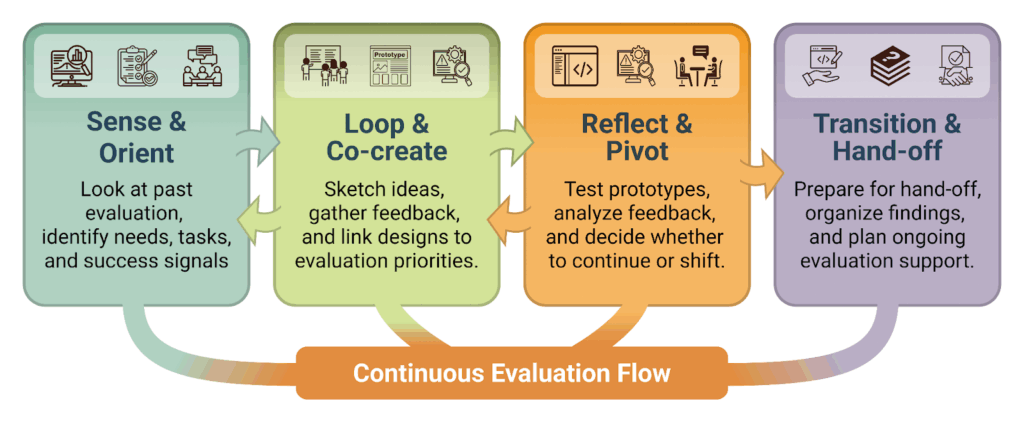

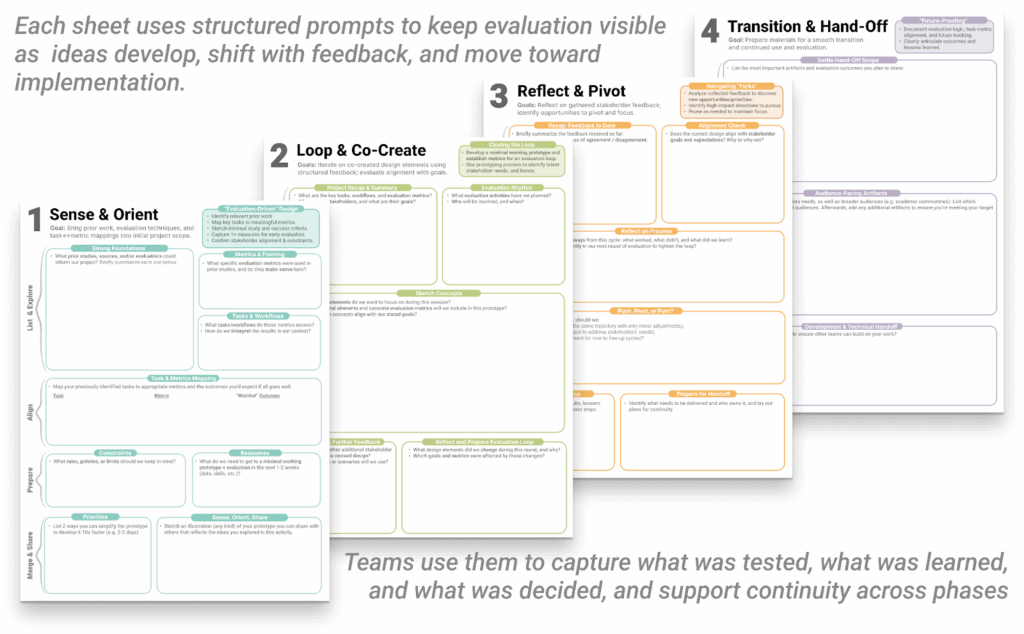

To make this evaluation-first approach usable, we shaped it into a simple workflow that teams can return to throughout the different phases of a project. The figure below shows the four activities that make up this flow. Each activity supports a different part of the process, and together, they help keep evaluation moving alongside the work.

Activity 1: Sense & Orient helps teams start from the same place by looking at past evaluation, identifying tasks and needs, and deciding what success should look like.

Activity 2: Loop & Co-create turns ideas into action through sketches, simple prototypes, and quick tests linked to evaluation priorities.

Activity 3: Reflect & Pivot creates space for a short pause to look at what the loops revealed, judge what is working, and decide whether to continue or shift.

Activity 4: Transition & Hand-off lays the groundwork for continuing EvalOps beyond the hand-off to the next team by organizing findings, documenting decisions, and planning ongoing evaluation support.

From Guiding Sheets to Design Sheets

As we refined EvalOps, we dug into the design and HCI literature for well-established approaches that experts trust. Frameworks and models such as the Design Study Methodology, Data First, the Design Activity Framework, and the Analyst Hierarchy of Needs helped us think clearly about tasks, stakeholders, and decision-making. They gave us structure, but they also revealed a gap: none of them fully described how to keep evaluation active while design and development work moves quickly.

We created a set of guiding sheets to use in our own work as part of the design process. Each loop showed us what helped and what did not. Over time, those early guiding sheets grew into four lightweight activity sheets that map directly to the EvalOps workflow we had defined. These sheets were created to surface evaluation needs and outcomes throughout the design process, not after it, and to give any practitioner a reusable structure they can follow or adapt.

We then piloted these sheets with SCADS teams. Their feedback helped us simplify the wording, sharpen the prompts, and remove anything that added friction. Teams noted that the sheets helped them focus, structure conversations around metrics, and plan more clearly how to close each loop. These four sheets are now at the core of the EvalOps design methodology. They give teams a practical way to plan evaluation, track evidence of success or of the need for more iteration, and support continuity in a process that moves quickly and changes hands often.

How EvalOps Shapes Tools

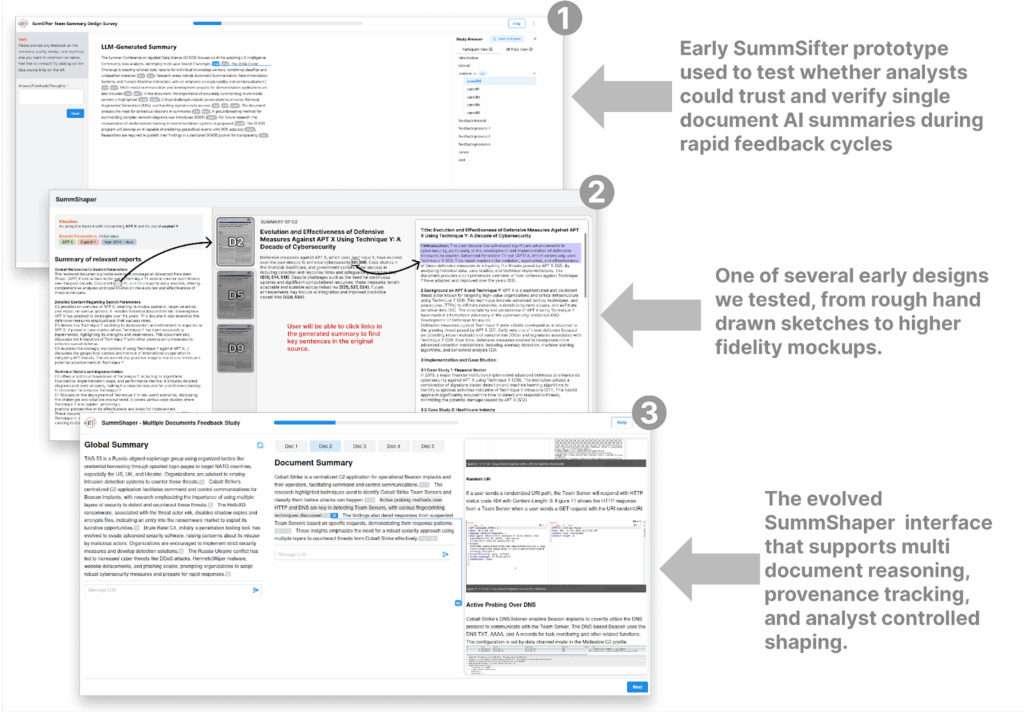

1. SummSifter to SummShaper: SummSifter was our early prototype for working with AI-generated summaries. It showed a single document summary and let analysts check it against the source. Analysts were clear about what they wanted and told us directly. Those early evaluation loops revealed needs that we might otherwise have discovered too late:

- Analysts wanted to verify summaries, not just read them.

- They wanted control, not a black box output.

- They wanted multi-document reasoning, not single-document summaries.

To keep momentum, we used everything from hand-drawn sketches to higher-fidelity mockups to explore new ideas quickly. That work eventually led to SummShaper, a tool that helps analysts compare multiple documents, trace statements to their sources, and shape summaries to fit real reporting tasks. SummShaper became a clear example of how EvalOps helped us pivot early and build toward what analysts actually needed. This work took place during SCADS 2024 and directly inspired the 2025 EvalOps project. Read the 2024 SCADS technical report.

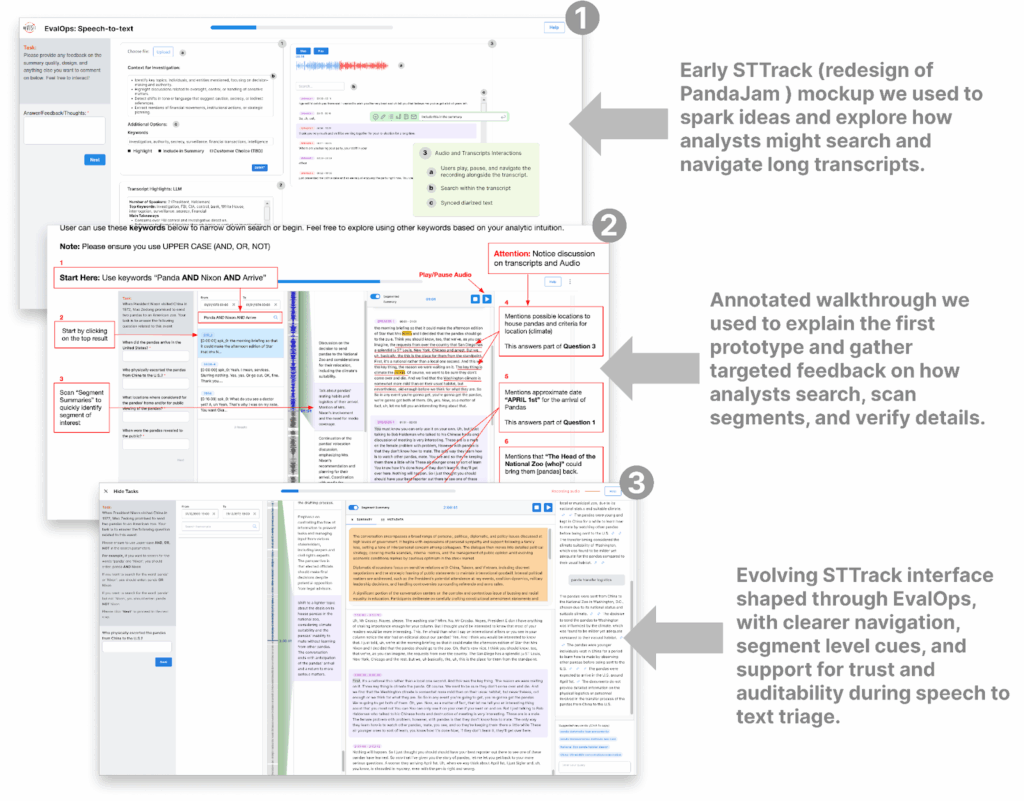

2. STTrack: STTrack was inspired by the PandaJam effort, which focused on tools to help analysts review long, noisy speech-to-text transcripts. Analysts used it to search, scan segments, and verify details across hours of audio. We selected it as a redesign project to test and validate the EvalOps framework on a mature system and to explore where visualization, interaction, and AI support could help. Early walkthroughs showed clear opportunities to improve navigation and help analysts work through transcripts more efficiently. We used annotated walkthroughs and targeted stress tests to explore how people moved through transcripts, spotted key segments, and checked claims. Over multiple feedback sessions, the prototype evolved into a more refined tool. These fast cycles showed us which features supported real analytic work and which needed to change, making STTrack an example of how EvalOps keeps evaluation close to design, even when working with an existing system. A detailed report is pending publication.

During our work on STTrack, the EvalOps methodology and our partnership with analysts were tested in a real and unexpected way. We had planned a final round of analyst testing to evaluate the next version of the tool with end users and stakeholders. But the government shutdown meant that analysts could not participate during this phase.

Early loops had given us a grounding in the tasks, metrics, and trust questions that mattered to the stakeholders. To stress-test the tool responsibly, we brought in academics who had never seen the system before and asked them to test it using a set of task-grounded criteria based on what we documented from working with analysts. Their job was to push the system from a UI/UX lens and from a trust/audit lens.

The takeaway is simple:

- Plans change, and access to collaborators shifts.

- EvalOps cannot prevent that, but it offers structure and agility to adapt with rigor.

- When tasks, metrics, and priorities are clear, evaluation can keep moving even when conditions are far from ideal.

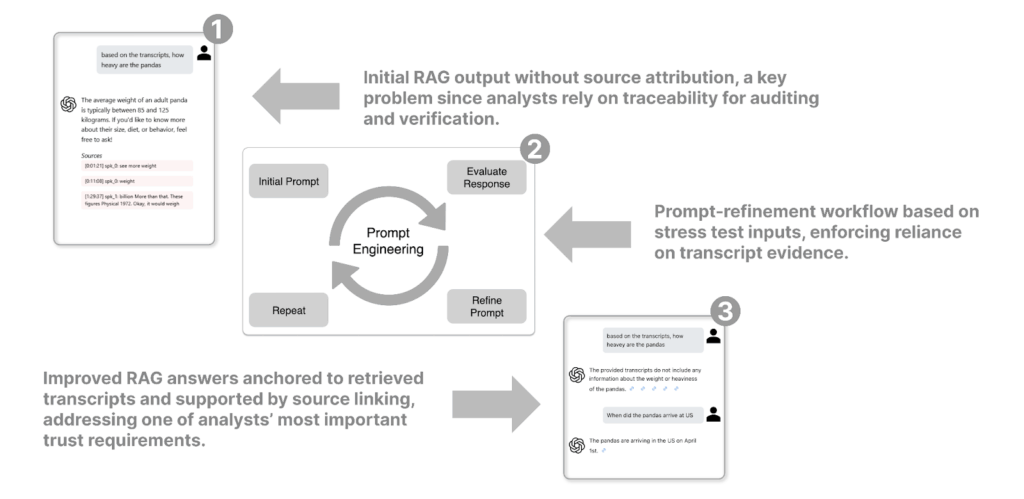

The academic testers didn’t just record bugs; they documented interaction sequences that let us trace issues back to specific design and backend decisions. For example, they found that the RAG system occasionally pulled in information that was not present in the transcript, even when contextual grounding should have constrained the response. That insight reshaped the next iteration of the backend: we revised the LLM prompts to enforce stronger reliance on the retrieved transcript segments. The result was a more transparent, verifiable interaction that matched the needs analysts had expressed earlier.
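To make the grounding issue concrete, here is a minimal sketch of the two pieces a tester might pair together: a prompt that restricts the LLM to retrieved transcript segments, and a crude check that flags answer sentences with little word overlap with those segments. This is not the STTrack backend. The `Segment` type, both function names, the prompt wording, and the 0.5 overlap threshold are all hypothetical, and lexical overlap is only a rough proxy for grounding: it misses paraphrased hallucinations and can flag faithful paraphrases.

```python
import re
from dataclasses import dataclass


@dataclass
class Segment:
    """A hypothetical retrieved transcript chunk with an id analysts can cite."""
    segment_id: str
    text: str


def build_grounded_prompt(question: str, segments: list[Segment]) -> str:
    """Assemble a prompt that restricts the LLM to the retrieved segments."""
    context = "\n".join(f"[{s.segment_id}] {s.text}" for s in segments)
    return (
        "Answer using ONLY the transcript segments below. "
        "Cite the segment id for every claim. If the segments do not "
        "contain the answer, reply exactly: NOT IN TRANSCRIPT.\n\n"
        f"Transcript segments:\n{context}\n\nQuestion: {question}"
    )


_WORD = re.compile(r"[a-z0-9']+")
_CITATION = re.compile(r"\[[^\]]*\]")


def _words(text: str) -> set[str]:
    """Lowercased word set; a deliberately crude proxy for content."""
    return set(_WORD.findall(text.lower()))


def unsupported_sentences(answer: str, segments: list[Segment],
                          min_overlap: float = 0.5) -> list[str]:
    """Flag answer sentences whose words mostly do not appear in the segments.

    Flagged sentences are leads for a human reviewer, not verdicts.
    """
    source = set().union(*(_words(s.text) for s in segments))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        # Drop citation markers like [s1] so they don't count as content words.
        words = _words(_CITATION.sub("", sentence))
        if not words:
            continue
        if len(words & source) / len(words) < min_overlap:
            flagged.append(sentence)
    return flagged


if __name__ == "__main__":
    segments = [
        Segment("s1", "The shipment left the port at noon on Tuesday."),
        Segment("s2", "Two trucks were loaded with crates."),
    ]
    answer = ("The shipment left the port at noon [s1]. "
              "The captain was arrested in Paris yesterday.")
    print(build_grounded_prompt("When did the shipment leave?", segments))
    print(unsupported_sentences(answer, segments))
```

In the usage example, the first sentence is fully covered by segment s1, while the second introduces details absent from both segments and is flagged for review. A production check would more likely use retrieval-aware or entailment-based scoring, but even a heuristic like this gives testers a repeatable way to log grounding failures against specific prompts.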

From this experience, we learned that EvalOps is useful not only for surfacing feedback from collaborators, but also for documenting these inputs and applying them systematically to work around unexpected roadblocks.

EvalOps for All: the EvalOps Website

From the start, EvalOps encouraged us to think about what happens after a project ends, not just what happens while we are building it. Projects rarely stay with one group for long. Teams rotate, developers shift to new efforts, stakeholders change roles, students graduate, and tools evolve. That is why the EvalOps website became one of our core deliverables for sharing and continuing the work.

The website includes the activity sheets, complete case studies with their repositories, study resources, templates, and other materials that help teams get started quickly. It also provides repositories that teams can clone and adapt for running their own studies, along with lessons learned and patterns we found helpful in earlier work.

The goal is not only to preserve materials, but to support the work that follows. We also planned for the Intelligence Community’s constraints: the site can be mirrored or packaged for federated or high-side networks, so teams can use EvalOps even without open internet access.

Acknowledgements

This work was supported in part by NSF Award Number 2213757. We also thank our collaborators, analyst partners, and the broader SCADS and LAS communities for their time, feedback, and insight throughout the development of EvalOps.

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.
