Tearing Is Sharing: Tearlines Summarize Classified Reports for Broad Readership

Amazon Web Services and LAS are using unclassified data to simulate tearlines so intelligence documents can be published for a wider audience.

For an intelligence report to be useful, it’s essential that the report can be shared with the right customers. This can be very challenging in real-world environments, as customers don’t always have access to the same data as analysts. So how can an analyst make a report widely available when the report may be highly classified or controlled? In theory, the answer’s simple – just write a “tearline,” or a summary of the report that provides the same substance, but isn’t as controlled. With this less controlled summary, an analyst can share the key findings from their report with a wider audience. According to Intelligence Community Directive 209, a tearline should “render facts and judgments consistent with the original reports” and be “written for the broadest possible readership” but at the same time not identify “sensitive sources, methods, or other operational information.” That means, in practice, writing a tearline can be a slow, inconsistent, and very manual process.

Luckily, this is the exact kind of problem that recent advancements in machine learning – including, yes, large language models may be able to help with. This year, the Laboratory for Analytic Sciences (LAS), in collaboration with Amazon Web Services (AWS), is looking into this exact problem – how can authors write tearlines more quickly, consistently, and in a way they can trust?

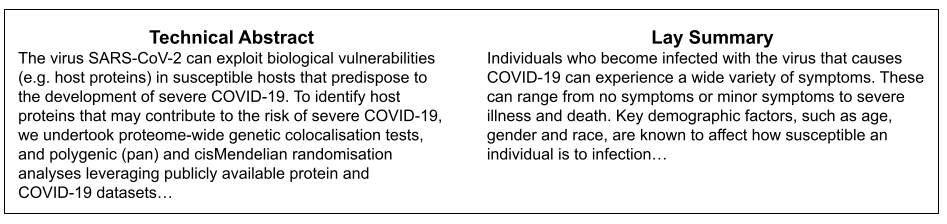

To do this, the team first needed unclassified data. The content of real intelligence reports and tearlines would generally be classified, so the LAS team searched for an unclassified proxy dataset. They found it in the form of the “Lay Summary” dataset. Two journals for biomedical research, PLOS and eLife, provide “lay summaries” of their articles. That is, on top of the usually complex and hard-to-interpret scientific abstracts, the journals also include more accessible summaries that a broader audience can easily understand. With this dataset, a wide set of authors are writing highly technical (a proxy for highly classified) articles, alongside lay summaries (a proxy for tearlines) written by the authors themselves or by expert editors.

With a problem and a dataset, the team began to consider a wide range of possible tools they could use to support writing tearlines. These include using massive, off-the-shelf large language models (LLMs) hosted by third parties (think GPT-4 or Claude 3), smaller open-source LLMs like Mistral that can be self-hosted, or a fine-tuned model. For any of those options, researchers could run the models with a simple prompt, include some static rules with the prompt, or try to find the best rules for the model to use to write each tearline. Giving a model precise rules to follow might produce better results, but finding the right rules to apply to a given document is challenging. Also, the rules could be derived directly from official guidance – there’s no shortage of publicly available Department of Defense guidelines to test models on – or they could be generated from proxy article-and-tearline pairs.

These are the problems the LAS/AWS team is investigating this year. The team is establishing automated evaluation methods to compare different approaches, investigating retrieval augmented generation (RAG) pipelines for finding the right rules, and comparing a wide range of model sizes and architectures. This includes automatically finding rules for an LLM to follow by generating them from pairs of highly technical abstracts and lay summaries. Using these automatically generated rules, the team is comparing various off-the-shelf LLMs for actually generating lay summaries from technical documents, and creating metrics to evaluate the lay summaries generated by the models. Early results for generating rules from document pairs, clustering these rules, and making use of LLMs to consolidate these rules have been especially promising.

On top of supporting tearline writing directly, LAS is also designing the project so that each piece of this process can be independently useful. For example, the tools developed to evaluate tearlines may be useful on their own in providing classification suggestions and explanations, and any system that could find the right rules for a specific situation could be helpful in contexts well outside of the tearlines problem.

Results from this collaboration with AWS and other LAS research projects will be shared at the end-of-year LAS Research Symposium in December.

About LAS

LAS is a partnership between the intelligence community and NC State that develops innovative technology and tradecraft to help solve mission-relevant problems. Founded in 2013 by the National Security Agency and NC State, each year LAS brings together collaborators from three sectors – industry, academia and government – to conduct research that has a direct impact on national security.

- Categories: