What is really going on here? Abductive reasoning in intelligence analysis

Bolstering human-machine teaming with support for narrative abduction

Tim van Gelder, Director, Hunt Lab, University of Melbourne

Note: This post represents one perspective on the project. Some project team members would disagree with some points made.

In late August, the Russian naval air base at Saki in Crimea lit up with a series of massive explosions. The destruction included hangars and eight fighter aircraft on the ground. The Russians said there was an accident. The Ukrainians claimed it was sabotage, but that would have been very hard to bring off…

Reading this, you might have been thinking something along these lines:

This seems like a serious blow to the Russians in the midst of their war against Ukraine. Presumably the Ukrainians were behind it. You can’t trust what the Russians say about it. But how did the Ukrainians manage it? If it wasn’t a special forces operation, perhaps they launched a missile attack. Do they have missiles that could have done the job? How far is Saki from Ukrainian territory? …

In other words, you might have been trying to figure out: what is the real story here?

This kind of thinking is called narrative abduction. Abduction is reasoning based on the principle that a true account will be able to explain the known features of a situation. It is widely regarded as one of the three main kinds of reasoning, alongside deduction and induction. Narrative abduction is where the candidate explanations are narratives – in the most basic sense, sets of events where some events lead to other events. It is trying to find the causal story which best explains what is known about a situation, and thus is most likely to be true.

Narrative abduction is very common.

Human brains have evolved to be “wired for story” as a way of making sense of our situations, and as entertainment. Not surprisingly, it is also something intelligence analysts do a lot. One eminent authority, Gregory Treverton, says “intelligence is ultimately about telling stories.” However, until recently, little research attention had been given to narrative abduction, and how it might be done better.

In intelligence, complex “what is going on here?” problems – e.g., what is going on in Iraq with WMDs? – are tackled by teams of analysts. Their collaboration is mediated by information technologies. Yet, because narrative abduction had been in a blind spot, we don’t yet have technologies tailored specifically to team-based narrative abduction.

That was our point of departure. How might a software platform help teams collaborate more effectively, so they can arrive at the best explanatory story more reliably, and perhaps even get there more efficiently? And more ambitiously, what aspects of that analytic work might the platform be able to take on, becoming in effect part of the team, not just a support for it?

This is an ergonomic question; it is asking how the analysts’ environment should be configured to enhance their performance. To develop and test a potential answer, we needed what we call a prescriptive theory of abduction, consisting of guidelines for how analysts should go about reasoning so as to get the best results given all the constraints and challenges involved in real analytic work. But a good prescriptive theory would have to be informed by two other kinds of theories. One is a normative theory, which would tell us how narrative abduction would be done by an ideally-situated rational agent. The other is a descriptive theory, which tells us how human analysts actually do reason abductively, given the cognitive machinery evolution endowed them with.

Unfortunately, none of these theories existed, at least not packaged up in a clear and agreed form.

We had to work on all four fronts more-or-less simultaneously, within the challenging time-frame of a one-year project.

We chose to work within the design science research paradigm. This is research aimed at building an artefact of some kind (e.g., a software platform), and which both draws on and contributes to scientific knowledge. Design science research involves repeated design-build-evaluate cycles, in which tentative theories can be rapidly implemented and assessed.

We started work on our first design-build-evaluate loop with a nascent prescriptive theory based on ideas from philosophy of science. In that discipline it is generally thought that scientists decide between rival theories by evaluating theories in terms of generic “explanatory virtues,” such as explanatory power, internal consistency, and simplicity. Some scientific theories take narrative form. So we conjectured that intelligence analysts should assess their explanatory narratives in much the same way.

We developed this idea into a prescriptive theory expressed in a method for narrative abduction, which we called the Analysis of Competing Narratives (ACN) – making a clear acronymic nod to the well-known structured analytic technique, the Analysis of Competing Hypotheses (ACH).

In parallel, we developed a prototype software environment (“app”) to support an analyst team applying the ACN. The app was based around analysts individually rating candidate narratives on a set of three virtues (narrative development, explanatory power, and substantiation). The app aggregated the analysts’ judgements in “wisdom of crowds” fashion into a collective assessment of the plausibility of each narrative. To maximize agility and flexibility, the app was rapidly assembled on Knack, a commercial cloud environment for developing no/low code apps.
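To make the aggregation step concrete, here is a minimal sketch of one way individual ratings could be pooled. The post does not say how the app actually combined judgements, so the details are assumptions: a 1-to-5 rating scale, equal weighting of the three virtues, and the median across analysts as the team score. The function name `collective_plausibility` and the example data are hypothetical.

```python
from statistics import mean, median

# The three virtues named in the post; the 1-5 scale is an assumption.
VIRTUES = ("narrative_development", "explanatory_power", "substantiation")


def collective_plausibility(ratings):
    """Combine individual ratings into one score per narrative.

    ratings maps narrative -> analyst -> virtue -> score.
    """
    scores = {}
    for narrative, by_analyst in ratings.items():
        # Assumption: each analyst's overall view is the unweighted mean
        # of their three virtue ratings.
        per_analyst = [
            mean(virtue_scores[v] for v in VIRTUES)
            for virtue_scores in by_analyst.values()
        ]
        # Assumption: the team view is the median across analysts, which
        # damps the effect of a single outlying rater.
        scores[narrative] = median(per_analyst)
    return scores


# Made-up ratings for two hypothetical narratives and three analysts.
example_ratings = {
    "narrative_a": {
        "analyst_1": {"narrative_development": 4, "explanatory_power": 5, "substantiation": 3},
        "analyst_2": {"narrative_development": 3, "explanatory_power": 3, "substantiation": 3},
        "analyst_3": {"narrative_development": 5, "explanatory_power": 4, "substantiation": 3},
    },
    "narrative_b": {
        "analyst_1": {"narrative_development": 2, "explanatory_power": 1, "substantiation": 3},
        "analyst_2": {"narrative_development": 2, "explanatory_power": 2, "substantiation": 2},
        "analyst_3": {"narrative_development": 1, "explanatory_power": 2, "substantiation": 3},
    },
}

team_scores = collective_plausibility(example_ratings)
print(team_scores)
```

Even in this toy form, each analyst has to supply three separate numbers for every narrative, so the number of distinct judgements grows quickly with the number of candidate narratives.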

We conducted an evaluation exercise of the ACN method and app with a small team of analysts from the LAS community, participating as research colleagues rather than human subjects. The team tackled a realistic problem where the challenge was to explain why North Korea tested a missile when it did. The exercise was hampered by some technical issues, but still yielded insights into the merits of, and problems with, the ACN approach.

One key problem was that the approach asked analysts to make too many distinct judgements (e.g., “How strong is narrative A on narrative development?”) of a kind they wouldn’t normally make and which seemed quite difficult to make.

Another problem was that the team gravitated towards one corner where they could rate candidate narratives for explanatory power with regard to individual items of evidence. This was tantamount to applying the Analysis of Competing Hypotheses, which was a concern in two ways. First, it would mean the approach was reinventing the wheel; and second, that wheel is broken anyway. (In a separate part of the project, we reviewed all research on the utility of ACH; the overall verdict is damning.)

We were at a fork in the road.

We could do more (re)design-build-evaluate loops on the ACN approach. Or we could try coming at it from a different angle. Arguably, the fundamental problem with the ACN was that it started from an external, normative perspective on how analysts should (in theory) assess explanatory narratives. What if we started instead at the descriptive end – i.e. with what proficient analysts actually do?

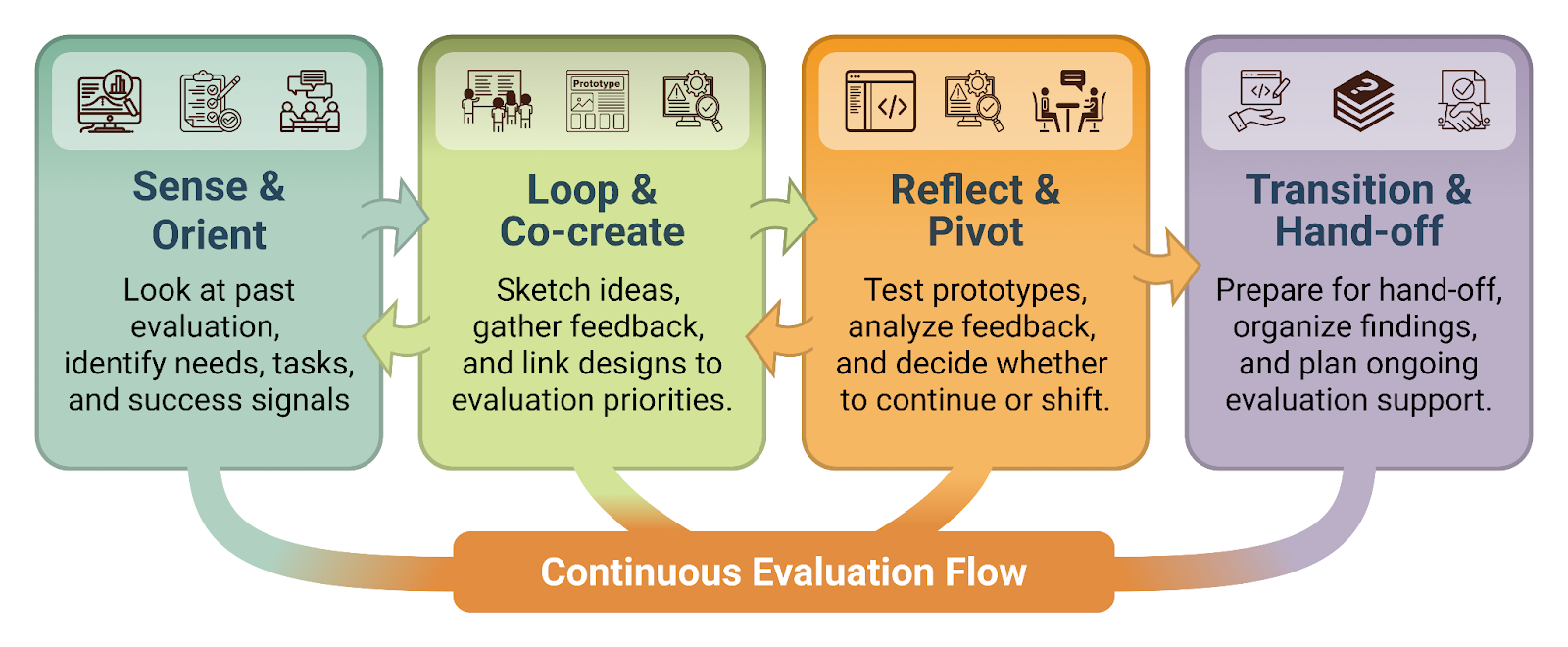

We had already started getting insights into this by observing analysts in ACN evaluation exercises, getting their feedback, and listening to their spontaneous reflections on how they would normally go about developing and assessing explanatory narratives. There had also been prior scientific research in the cognitive science of intelligence analysis, focusing on how analysts make sense of complex situations. As it turned out, much of this research was describing analysts engaged in abductive reasoning. It also seemed to cohere well with what we were learning in our evaluation exercises.

Based on this descriptive knowledge base, we forged a new prescriptive theory, also expressed in a method, the Evaluation of Explanatory Narratives (EEN). The new approach was designed around the observation that analysts generally attempt to find the best explanatory narrative by identifying and seeking to resolve problems with candidate narratives. They have various strategies for resolving problems including searching for new evidence, challenging parts of the evidence, or modifying the narrative. Narratives with too many intractable problems are rejected. Through this process, still-viable narratives are gradually elaborated and strengthened, and analysts intuitively and holistically develop a sense of how promising or plausible each narrative is.

Outline of the Evaluation of Explanatory Narratives method for narrative abduction.

The EEN renders this observed activity into an explicit procedure, albeit one that is full of loops and allows for indefinite iteration. The idea of explanatory virtues survives, but with the virtues now functioning as perspectives from which problems with candidate narratives might be identified.

What kind of software environment might facilitate an analyst team’s use of this method? Put differently, what is the ergonomic theory corresponding to this new prescriptive theory? Continuing with the design science approach, we needed a new app. We thought that the core functionality of this app should be support for the team to quickly and easily create and modify shared lists, such as a list of key information about the situation, a shortlist of viable explanatory narratives, a list of the apparent problems with each narrative, and so on. This kind of functionality was already provided by another commercial platform, Trello, so we quickly configured a workspace to support the EEN, improving usability using Unito to automate some tasks.
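The shared lists described above can be pictured as a small data model. The following is only an illustrative sketch, not the Trello configuration we used: the class names, the three problem statuses, and the numeric `max_intractable` cut-off are all assumptions made for the example. In the EEN itself, the decision to drop a narrative is a holistic judgement by the analysts, not a fixed threshold.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    OPEN = "open"                # identified, not yet addressed
    RESOLVED = "resolved"        # e.g. by new evidence or a modified narrative
    INTRACTABLE = "intractable"  # could not be resolved


@dataclass
class Problem:
    description: str
    status: Status = Status.OPEN


@dataclass
class Narrative:
    title: str
    problems: List[Problem] = field(default_factory=list)

    def intractable_count(self) -> int:
        # Count the problems the team has marked as intractable.
        return sum(1 for p in self.problems if p.status is Status.INTRACTABLE)


def shortlist(narratives, max_intractable=1):
    """Keep narratives whose intractable problems do not exceed the cut-off.

    The cut-off is a hypothetical stand-in for the analysts' judgement
    that a narrative has "too many" intractable problems.
    """
    return [n for n in narratives if n.intractable_count() <= max_intractable]


# Hypothetical example: two candidate narratives with their problem lists.
narrative_a = Narrative("Narrative A", [
    Problem("Timing does not match one report", Status.RESOLVED),
    Problem("No direct evidence for a key event"),
])
narrative_b = Narrative("Narrative B", [
    Problem("Contradicts two independent sources", Status.INTRACTABLE),
    Problem("Requires an implausible capability", Status.INTRACTABLE),
])

viable = shortlist([narrative_a, narrative_b])
print([n.title for n in viable])
```

Keeping problems attached to narratives, rather than scoring narratives directly, mirrors the shift from rating virtues in the ACN to identifying and resolving problems in the EEN.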

Screenshot of the Trello-based prototype app intended to support a team of analysts to use the EEN method by allowing the rapid creation and modification of shared lists.

Our project schedule allowed for one relatively informal evaluation exercise. Not surprisingly, this first prototype had some limitations, but overall the approach seemed much more promising.

So, late in the project, where have we arrived?

First, we’ve got a deeper appreciation of the complexity of narrative abduction as a cognitive/epistemic process, and of the intuitive expertise of experienced analysts.

Second, more than technical solutions, our progress has been in clarifying the nature of the challenge, and developing foundations for future work in this area. Our final report contains extensive discussion of normative, descriptive, and prescriptive dimensions.

Third, we can be reasonably confident that the ACN approach (i.e. designing software support for analyst teams around the idea of rating narratives on some set of explanatory virtues) is not the right way to go, however plausible it seemed at the outset. As Thomas Edison might have said, that’s progress.

Finally, throughout this project we grappled with the problem: how can complex explanatory narratives, and their relationships to a complex body of evidence, be represented so as to facilitate shared understanding among team members? Standard text? Tables or lists? These familiar options are easy to implement but quickly show their limitations. A major advance in supporting analyst teams might come from developing ways to visualize narrative structure. A collaborative project in 2023 will be exploring this territory.

Note: This material is based upon work supported in whole or in part with funding from the Laboratory for Analytic Sciences (LAS). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the LAS and/or any agency or entity of the United States Government.
