EYERECKON: Train Computer Vision Models to Detect Rare/Uncommon Objects

Felecia Morgan-Lopez, James T. Smith III, Alfred Jarmon, David Wall, Dr. Sambit Bhattacharya (Fayetteville State University Computer Science Professor) and FSU Senior Design Teams

The Problem

Chinese-manufactured closed-circuit television (CCTV) cameras, such as those made by Hikvision and Huawei, pose a risk to US national security. This is why Congress passed, and President Biden signed into law, the Secure Equipment Act in November 2021.

Training computer vision models to detect these types of rare/uncommon objects can be challenging. Rare/uncommon objects in this context refer to objects that are underrepresented or not represented at all in a dataset. In publicly and freely available datasets, Chinese CCTVs are rarely, if ever, represented. Further, while amassing publicly available images of Chinese-manufactured CCTVs to train computer vision models, the Laboratory for Analytic Sciences (LAS) immediately noted that (1) images of current and past CCTV models were scattered throughout the internet rather than contained in a centralized data repository such as MS COCO; (2) there are few, if any, pre-labeled datasets for Chinese-manufactured CCTVs; and (3) there is an overall lack of images depicting CCTVs from specific manufacturers. Not having enough of the ‘right’ training data makes it difficult for computer vision models to detect these rare/uncommon objects. This could ultimately lead the models to misidentify Chinese-manufactured CCTVs as some other object, to the potential detriment of NSA’s security measures.
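One way to make "underrepresented" concrete is to count annotations per category in a labeled dataset. The sketch below assumes annotations in the COCO JSON layout (`categories` and `annotations` lists, each annotation carrying a `category_id`). The function name `find_rare_categories`, the cut-off of 50 annotations, and the toy numbers are hypothetical choices for illustration, not values used by the LAS or FSU.

```python
from collections import Counter


def find_rare_categories(coco: dict, min_count: int = 50) -> dict:
    """Return {category_name: annotation_count} for categories below min_count.

    `coco` follows the COCO annotation layout. The default threshold of 50
    is a hypothetical cut-off for "rare", not a project value.
    """
    # Count how many annotated instances each category has.
    counts = Counter(ann["category_id"] for ann in coco["annotations"])
    names = {cat["id"]: cat["name"] for cat in coco["categories"]}
    # Categories with zero annotations are "not represented" at all; keep them too.
    return {
        names[cid]: counts.get(cid, 0)
        for cid in names
        if counts.get(cid, 0) < min_count
    }


# Toy dataset: plenty of people, very few CCTV cameras (hypothetical numbers).
toy = {
    "categories": [{"id": 1, "name": "person"}, {"id": 2, "name": "cctv_camera"}],
    "annotations": [{"category_id": 1}] * 120 + [{"category_id": 2}] * 3,
}
print(find_rare_categories(toy))  # {'cctv_camera': 3}
```

Categories that never appear in `annotations` are reported with a count of 0, which covers the "not represented" case as well as the "underrepresented" one.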

The Academic Partnership

In the Fall of 2021, a partnership was formed between the LAS and the Computer Science Department at Fayetteville State University (FSU) to assist with developing a method to detect rare/uncommon objects in images. Located 15 miles from the Fort Bragg military base, FSU is a state-funded Historically Black College and University (HBCU) with a large military student population. Approximately 25% of FSU students are active-duty service members or veterans, or otherwise have prior military experience.

The intent of the LAS and FSU partnership is to develop and leverage joint expertise in machine learning and computer vision. This partnership was formed through NSA’s existing Educational Partnership Agreement (EPA) with the University of North Carolina (UNC) school system. Further, the LAS is seizing the opportunity to foster a lasting relationship that addresses critical NSA challenges in support of NSA’s research, academic, and diversity priorities. The collaboration also provides an opportunity to recruit and possibly hire FSU students, especially those with military backgrounds, DoD experience, and/or clearances.

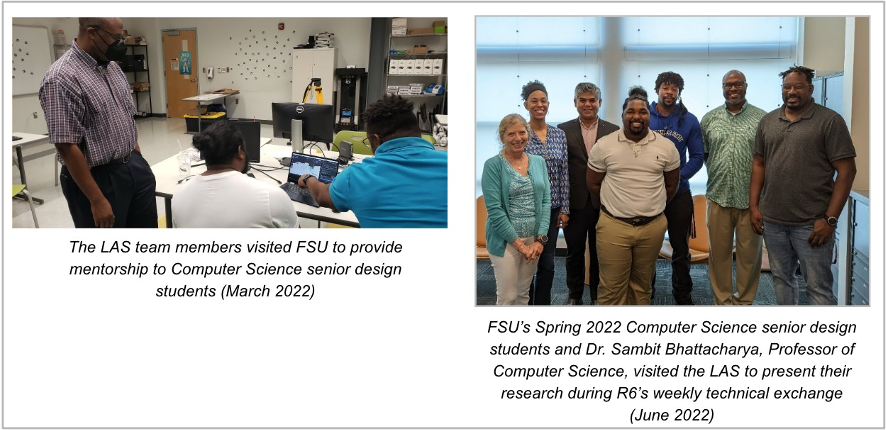

In collaboration with Dr. Sambit Bhattacharya, Professor of Computer Science at FSU, and the Computer Science Senior Design courses of Spring and Fall 2022, the LAS team researched a concept for the first phase of a rendering engine that leverages Cycle-Consistent Generative Adversarial Networks (CycleGANs) to generate synthetic data on rare/uncommon objects and thereby improve the robustness of computer vision models for object detection. As a use case for the collaboration with FSU, the LAS team chose the synthetic generation of Chinese-manufactured CCTVs, which are deployed worldwide, including as part of the infrastructure of China’s Belt and Road Initiative throughout the Middle East.

The Research

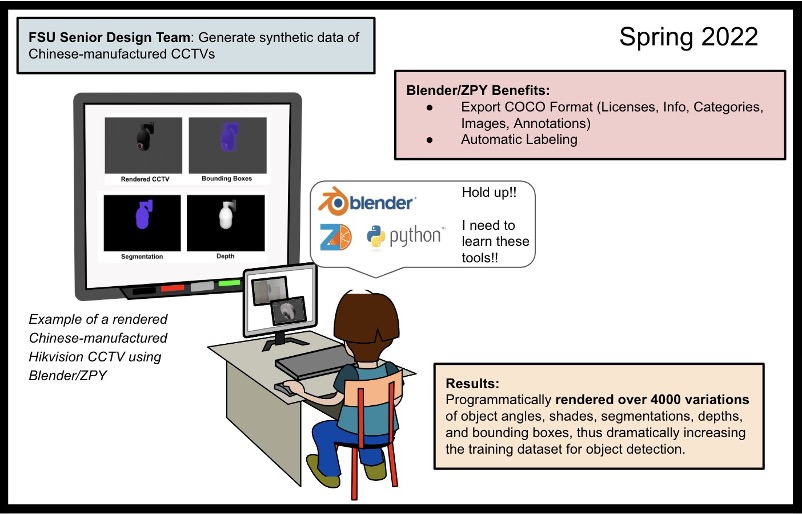

The LAS team and Dr. Bhattacharya mentored the FSU Spring 2022 senior design students, whose objective was to synthetically generate Chinese-manufactured CCTVs. Using the open-source 3D graphics software Blender, the students rendered a total of four Huawei and Hikvision CCTV models. To render data at scale, a Blender plug-in called ZPY (created by ZumoLabs) allowed the students to programmatically render over 4,000 variations in object angle and shading, together with segmentation masks, depth maps, and bounding boxes in the COCO format.
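For readers unfamiliar with the COCO format, the sketch below shows roughly what one bounding-box annotation looks like. It is a hypothetical helper written for illustration and does not reproduce ZPY's or Blender's API; the file name, category name, and coordinates are made up. COCO stores each box as `[x, y, width, height]` in pixels, measured from the top-left corner of the image.

```python
import json


def make_coco_annotation(ann_id, image_id, category_id, x_min, y_min, x_max, y_max):
    """Build one COCO-style object annotation from corner coordinates.

    Hypothetical helper for illustration; not part of Blender or ZPY.
    """
    width = x_max - x_min
    height = y_max - y_min
    return {
        "id": ann_id,
        "image_id": image_id,
        "category_id": category_id,
        "bbox": [x_min, y_min, width, height],  # COCO order: x, y, w, h
        "area": width * height,  # box area used here as a stand-in for mask area
        "iscrowd": 0,
    }


# A single rendered image containing one CCTV camera (made-up values).
dataset = {
    "images": [{"id": 1, "file_name": "render_0001.png", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "cctv_camera"}],
    "annotations": [make_coco_annotation(1, 1, 1, 100, 50, 220, 130)],
}
print(json.dumps(dataset["annotations"][0]["bbox"]))  # [100, 50, 120, 80]
```

In real COCO files the `area` field usually holds the segmentation-mask area; because a renderer knows the exact object mask, it can supply that value directly instead of the box area used here.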

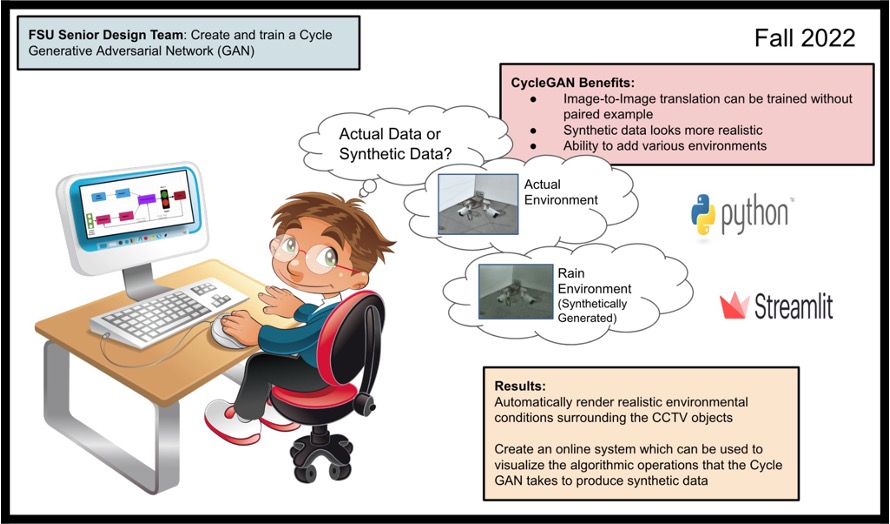

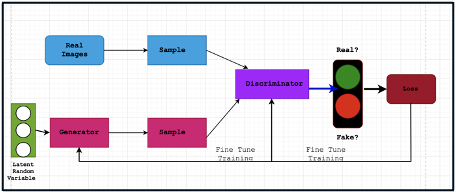

The LAS team continued its research with the FSU Fall 2022 senior design students, with Dr. Bhattacharya as co-advisor, this time leveraging CycleGANs. The benefits of using CycleGANs are that (1) image-to-image translation can be trained without paired examples, (2) the synthetic data looks more realistic, and (3) various environments can be added to the images.
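For reference, the objective from the original CycleGAN paper (Zhu et al., 2017) is shown below, with a generator G mapping domain X to domain Y, a generator F mapping Y back to X, and discriminators D_X and D_Y. The cycle-consistency term is what makes benefit (1) possible: it only asks that translating an image to the other domain and back recovers the original, so no paired examples are required. Reading X as clear-weather CCTV images and Y as rainy scenes is an assumption for this use case, and the exact losses and weights the FSU teams used are not stated here. The original paper sets λ = 10 and, in practice, replaces the log-likelihood adversarial loss with a least-squares loss.

```latex
\begin{aligned}
\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) &= \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\log D_Y(y)\right]
  + \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right] \\
\mathcal{L}_{\text{cyc}}(G, F) &= \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right]
  + \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right] \\
\mathcal{L}(G, F, D_X, D_Y) &= \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X)
  + \lambda\, \mathcal{L}_{\text{cyc}}(G, F) \\
G^{*}, F^{*} &= \arg\min_{G, F}\, \max_{D_X, D_Y}\, \mathcal{L}(G, F, D_X, D_Y)
\end{aligned}
```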

The students were divided into two teams:

Team 1:

Created and trained a CycleGAN that takes a limited number of Chinese-manufactured CCTV images (called “the input set”), consisting of (a) images of physically real Chinese-manufactured CCTVs and (b) graphically rendered images of Chinese-manufactured CCTVs. The synthetic images shown in Figure 2 (below) are an example of the CycleGAN output, which depicts a realistic environmental condition (e.g., rain) surrounding the Chinese-manufactured CCTV objects.
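Because a CycleGAN trains on unpaired data, the two domains only need to be separate collections of images. The sketch below draws independent mini-batches from each collection. Placing the input set in one domain and rain scenes in the other, as well as every file name and set size, is a hypothetical example rather than the team's actual pipeline.

```python
import random


def unpaired_batches(domain_a, domain_b, batch_size, seed=0):
    """Yield (batch_a, batch_b) tuples drawn independently from two image sets.

    The two domains may differ in size, and no image in one domain has a
    matching target image in the other. Both lists must be non-empty.
    """
    rng = random.Random(seed)
    a, b = list(domain_a), list(domain_b)
    rng.shuffle(a)
    rng.shuffle(b)
    # One pass covers the larger domain; indices wrap around the smaller one.
    steps = max(len(a), len(b)) // batch_size
    for step in range(steps):
        idx = range(step * batch_size, (step + 1) * batch_size)
        yield [a[j % len(a)] for j in idx], [b[j % len(b)] for j in idx]


# Hypothetical file names: an input set of real + rendered CCTV images,
# and an unrelated collection of rainy street scenes.
input_set = [f"real_{i}.jpg" for i in range(3)] + [f"render_{i}.png" for i in range(5)]
rain_set = [f"rain_{i}.jpg" for i in range(12)]
for batch_a, batch_b in unpaired_batches(input_set, rain_set, batch_size=4):
    print(len(batch_a), len(batch_b))  # 4 4 (printed three times)
```

Each step pairs four input-set images with four rain images that have no correspondence to each other, which is exactly the setting the cycle-consistency loss is designed for. The smaller domain is simply reused within a pass.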

Team 2:

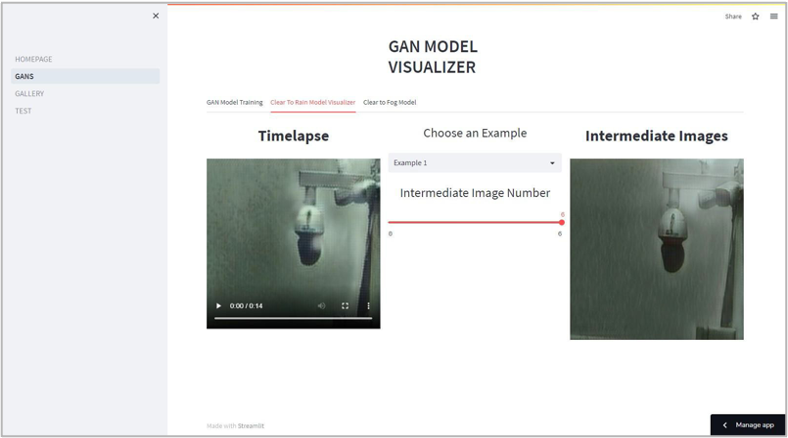

Created a front-end user interface (web application) to demonstrate the CycleGAN process on given data. The interface displays (1) the input data used by the CycleGAN, (2) the CycleGAN results, and (3) metrics about the CycleGAN process, such as how long the CycleGAN took to process the data. As seen in Figures 3 and 4 (below), the web application shows the CycleGAN process of rendering rain environments around the CCTV object, and the user can move a slider to step through the CycleGAN's rendering process. Fog environments were also rendered using the same methodology.
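The timing metric the interface displays could be collected along the lines of the sketch below. `translate` is a hypothetical stand-in for a function that runs the trained CycleGAN generator on one image; the dummy version here only renames the file so the code runs without a model. The resulting dictionary serializes directly to JSON, which a web front end could display next to the images.

```python
import json
import time


def run_with_metrics(translate, image_paths):
    """Apply `translate` to each image and collect simple timing metrics.

    `translate` is a hypothetical placeholder for a function that runs a
    trained CycleGAN generator on one image and returns the result.
    """
    outputs, per_image = [], []
    start = time.perf_counter()
    for path in image_paths:
        t0 = time.perf_counter()
        outputs.append(translate(path))
        per_image.append(time.perf_counter() - t0)
    total = time.perf_counter() - start
    n = len(image_paths)
    metrics = {
        "num_images": n,
        "total_seconds": round(total, 4),
        "mean_seconds_per_image": round(total / n, 4) if n else 0.0,
        "max_seconds_per_image": round(max(per_image), 4) if per_image else 0.0,
    }
    return outputs, metrics


def fake_translate(path):
    """Dummy 'generator' that only renames the file, so no model is needed."""
    return path.replace(".png", "_rain.png")


outputs, metrics = run_with_metrics(fake_translate, ["cam_01.png", "cam_02.png"])
print(outputs)  # ['cam_01_rain.png', 'cam_02_rain.png']
print(json.dumps(metrics))  # JSON payload a front end could display
```

`time.perf_counter` is used rather than `time.time` because it is a monotonic, high-resolution clock intended for measuring elapsed intervals.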

To demonstrate the outcome of the first-phase rendering engine, the FSU Spring 2022 senior design students and Dr. Bhattacharya were invited to the LAS to present their research on synthetic data generation to over 100 NSA attendees during the R6 weekly technical exchange. Additionally, the LAS team invited the Fall 2022 FSU students to the LAS annual symposium to present the results of their research on GANs. Both venues gave the senior design students the opportunity to showcase their talents in AI/ML and critical thinking, and to apply advanced technological approaches to developing a capability of immediate need to NSA.

Long-term Agreement

As a result of the collaboration between the LAS and the FSU senior design class, the LAS team worked with the Office of Research and Technology Applications (ORTA) and FSU leadership to establish the 5th Minority Serving Institution (MSI) Cooperative Research and Development Agreement (CRADA), which became official in May 2022. FSU now has the opportunity to partner with NSA on research and development topics such as the Internet of Things, Cyber Security, and Secure Composition and System Science.

Additionally, establishing the 5th MSI CRADA with FSU has allowed the students to participate in the semi-annual MSI CRADA Showcase hosted by ORTA. The showcase began in Spring 2022 and is continuing in Fall 2022. Its goal is to give students from institutions that have an MSI CRADA with NSA the opportunity to work on research topics such as Quantum Computing, Trustworthy AI, Geospatial Computer Vision, and AI Integration. For eight weeks, NSA and industry mentors provide guidance while the students work on their research topics. The students then present their work to a larger audience from NSA, academia, and industry in a 10-15 minute presentation about the problem and solution. This is another opportunity for the students to showcase their talents to leaders within government, industry, and academia, which could lead to scholarships and job offers.

Success Story (Mutual Benefits)

The LAS/FSU partnership exposed the senior design students to real-world mission challenges, which sparked their interest in continued collaboration and/or employment opportunities with NSA.

One student continued working with the LAS as a summer 2022 intern, researching ways to automate synthetic data generation of rare objects using GANs.

Another student applied to the Computer Science Development Program (CDP) and multiple NSA technical positions.

Two students applied to the NSA summer internship Computer Science program.

One student applied for a summer internship vacancy in Language and Analysis (Mandarin).

Additionally, the senior design projects provided the foundation for further collaboration between FSU and the LAS. FSU applied and was selected as a 2023 LAS performer to continue its research in computer vision.

Finally, the LAS learned that FSU is developing an undergraduate Cybersecurity curriculum, so the LAS provided information about the Center of Academic Excellence in Cybersecurity (CAE-C) application process. This gives FSU the opportunity to partner with NSA in the future on Cyber Defense, Cyber Research, and Cyber Operations.

Next Steps (FY23)

The LAS will continue its partnership with FSU in 2023 as a LAS performer.

Potential research topics for next year include:

- Combine synthetically generated CCTV variations (shape, size, location & pose) with different environmental conditions (rain, snow, sandstorm, etc.) by leveraging CycleGAN to increase the amount of training data.

- Test and select the optimal graphics software tools and rendering approaches at scale.

- Identify cost-efficient alternatives to GPU processing to train the CycleGAN.

- Improve computer vision models by testing against the CycleGAN outputs.
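The first item amounts to planning a combinatorial grid of variations. The sketch below enumerates every combination of camera model, pose, and environmental condition as a job specification. The axis values are hypothetical, and splitting the work so that geometry comes from the 3D renderer while weather comes from a CycleGAN translation is an assumption about how the two phases might be combined.

```python
import itertools

# Hypothetical variation axes; values are illustrative, not project settings.
CAMERA_MODELS = ["hikvision_dome", "huawei_bullet"]
POSES = ["front", "side", "overhead"]
CONDITIONS = ["clear", "rain", "snow", "sandstorm"]


def build_render_plan(models, poses, conditions):
    """Enumerate every (model, pose, condition) combination as a job spec.

    Model and pose would be varied by the 3D renderer; `condition` marks
    which CycleGAN translation (if any) to apply afterwards. "clear"
    means the rendered image is used without translation.
    """
    plan = []
    for model, pose, condition in itertools.product(models, poses, conditions):
        plan.append({
            "model": model,
            "pose": pose,
            "condition": condition,
            "apply_cyclegan": condition != "clear",
        })
    return plan


plan = build_render_plan(CAMERA_MODELS, POSES, CONDITIONS)
print(len(plan))  # 24
```

With two models, three poses, and four conditions, the grid already yields 24 jobs, 18 of which need a CycleGAN pass. Adding shape, size, and location as further axes multiplies that count, which is one reason cost-efficient rendering and training matter.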

Contact Info

- Felecia Morgan-Lopez (fdvega@ncsu.edu)

- James Smith (jtsmit23@ncsu.edu)

- David Wall (dawall2@ncsu.edu)

- Alfred Jarmon (ajarmon@ncsu.edu)
