Personalize User Workflow and Improve Technological Engagement

Krishna Marripati, Dr. Ana Maria Staicu, Dr. Bill Rand

North Carolina State University

This project focuses on the “Insider Threat” evidence-finding game, developed by a team at the University of Kentucky with LAS funding. Christine Brugh was involved in this project. In the game, intelligence analysts investigate a fictional cybercrime at a startup using tools like database queries, web searches, deleted document recovery, and visualization tools (timeline, table, map). Analysts explore documents like email, calendar entry, network log, information, then judge employees as guilty or innocent based on their findings. The analysts’ interactions are captured in activity logs, detailing tool use, document type (e.g., email, calendar entry), actions (e.g. save, assign, read), and reasoning. Our analysis has two goals:

- identifying main strategies for solving the game, and

- developing recommenders to help analysts select optimal tools and document types.

Two scenarios were investigated: “michelle-cargo” (20 analysts) and “ivan-karikampani” (5 analysts). Each analyst is identified by a unique User ID (0–24). Key information about each analyst includes:

- Education Level: Recorded as “Bachelor or Graduate Degree.”

- Training: Indicates prior training, recorded as “Training” or “No Training.”

- Age: Categorized as “30 or younger,” “31-49,” and “50 or older.”

- Analyst Type: Summarized as either Intelligence Analyst (IA) or Other Analyst (OtherA).

We began with an exploratory analysis, revealing that 21 out of 25 analysts identified the correct criminal. The top-left heat map plot summarizes demographic data and its relationship to correctly solving the game. We then applied a logistic regression model to predict the probability of identifying the correct criminal based on education level, training, age range, and analyst type. While the model achieved high accuracy (AUC = 92.26%), none of the predictors showed a significant association with the outcome.

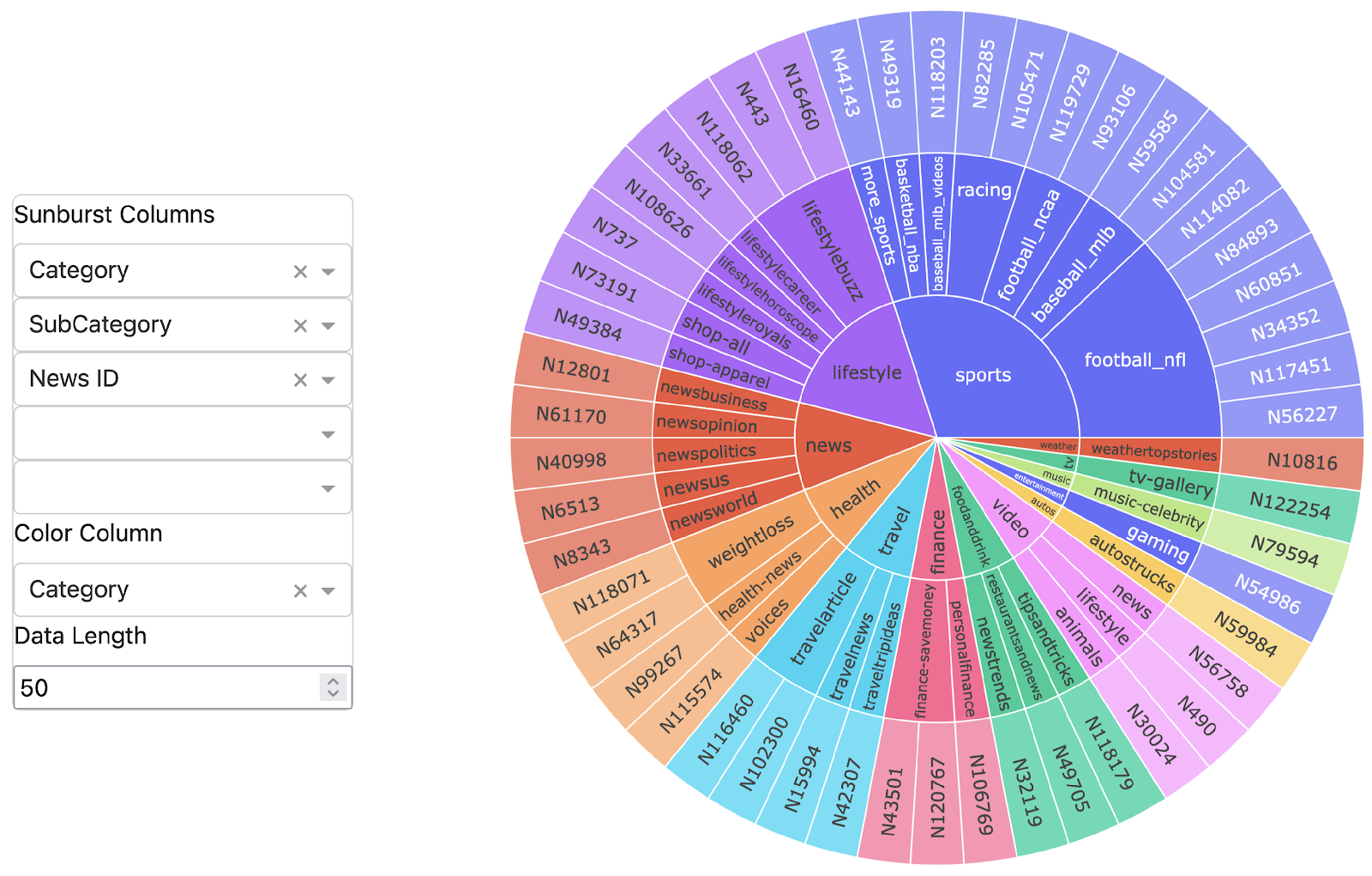

We summarized the workflow by tracking the frequency of each tool and document type used throughout the game, from the start (denoted as “0”) to completion (denoted as “1”). The two plots in the middle-left column and the four plots at the bottom illustrate the frequency of use for various tools and specific document types. Each curve represents an individual analyst, with frequency measured by the number of steps. These plots highlight instances of outlying behavior among analysts. We applied Multivariate Functional Principal Component Analysis to capture key features in tool and document type usage, followed by Partitioning Around Medoids (PAM) clustering to group analysts to identify key strategies, leveraging PAM’s robustness to outliers. The top two figures highlight the primary strategies across four main approaches for tool and document-type usage.

Multivariate Functional Principal Component Analysis (MFPCA) and Partitioning Around Medoids (PAM) are two complementary techniques often used in clustering complex data. MFPCA is applied to functional data to reduce its dimensionality, extracting the most significant features or modes of variation, which helps capture the underlying patterns in the data, such as tool and document usage behaviors. This transformation simplifies the data while retaining its essential structure. PAM, a robust clustering algorithm, is then applied to group the data into clusters based on the reduced features. Unlike k-means, PAM uses actual data points as cluster centroids, making it more resilient to outliers. The resulting clusters represent analysts with similar behaviors or strategies, which can be interpreted as distinct approaches to using tools and documents. By combining MFPCA for dimensionality reduction with PAM for clustering, this approach allows for more meaningful groupings and insights into user behavior, facilitating targeted recommendations and improved decision-making.

For building a recommender, we explored two approaches. The first approach uses a Markov Decision Process to estimate the probability of selecting a current tool and document type based on choices from the two preceding steps, focusing on past scenarios that led to a “save” action. Specifically, at each step for a new analyst, the tool and document type pair is predicted based on the most probable combination, considering the two most recent tools and document types that led to a “save” action within the next 10 steps. The pair with the highest frequency is chosen. The leftmost center panel on the poster shows results for an analyst over several consecutive steps. This method achieved an accuracy of 52.8% (SE = 9.5%) when tested on 9 analysts across 40 consecutive steps. The second approach is a Reinforcement Learning based approach.

We utilized reinforcement learning methods to find out the most valuable steps a user could take when analyzing the evidence, we then clustered the users using the broad ideas/strategies discovered by the reinforcement learning approach. We plan to build on this work to identify the cluster a new analyst could fit into, and later use the reinforcement learning to predict next steps that could lead the user to their goal.

Reinforcement learning is a type of machine learning method, where an AI agent learns from the environment directly. The idea is for the agent to come up with a policy which when applied to the environment would lead the agent in a way which would obtain the highest amount of reward collectively. Rewards are defined by the developer of the agent to help guide the agent. For instance, finding the correct guilty party in the above scenario should generate the highest reward. Applying reinforcement learning to our problem would first require us to define the terms used:

- Agent – The agent is modeled to resemble the analyst trying to navigate through the maze to find the guilty party.

- Reward – The reward is an intermediate positive or negative signal that the agent receives when solving the game. We considered two types of reward: the first one is related to how fast the choice leads to a “save” action – this is indicative of important content concerning evidence. The second type of reward is related to whether an action “assign” is made that is in agreement with the correct identification of the criminal.

- State – The state is the tab the analyst is currently examining; in our case this is the tool.

- Action – The ‘action’ is what the analyst does to navigate. For our game the action is the document type the user is working on and the concrete action that is being performed on it. The comprehensive list of document types includes: are ‘email’,’Network Log’, and ‘Information’. The concrete actions that can be performed on these document types are ‘Reading’, ‘Saving’.

- State-Action Pairs – A state-action pair is the combination of the current state of the agent and what its current action is in that state. For instance, (table, read_Email)and (table, save_NetworkLog) are two state-action pairs.

Monte Carlo Methods:

Once the reinforcement learning paradigm has been defined, Monte Carlo methods can be run on the observed sequence of state action pairs. Monte Carlo method is a model free learning method that lets us find the value of state action pairs using just the experience of an agent, without mapping out the entire Markov decision process. Every state action pair will result in a reward being generated, the reward for assigning a person guilty/innocent if it is in agreement with the final true value would be 3, the reward would be 2 if the assignment is not in agreement with the ground truth value. Occasionally if the users saves a document as important the reward would be 1 as it is believed that the document might be important. For every other state action pair the reward would be -1. Running Monte Carlo on this sampled experience results in finding a series of state action pairs along with their value estimates for that agent. This lets us broadly identify the strategy that is used by the analyst.

Reference chains:

In our approach, we model user behavior as reference chains, defined as sequences of state-action pairs that initiate from a specific starting state-action pair and culminate in an ‘assign’ state-action pair. By applying a Monte Carlo simulation on these user-generated chains, we compute value estimates for each chain, which serve as a ranking metric to evaluate their effectiveness in guiding users to the desired end state. This ranking allows us to identify high-value reference chains, which can then be leveraged to recommend optimal state-action pairs to future users, aiding decision-making processes and improving the overall guidance toward ‘assign’ outcomes.

Developing a Recommender:

The final step in our analysis is to provide recommendations for a new analyst. As the new analyst starts a new exploration of evidence, a vector of all possible states and state-action pairs is formed and this vector is used to calculate the distance from all of the cluster’s centroids. Once there is sufficient evidence that an analyst likely belongs to a cluster, then the history of past analysts are used to guide the user and to make recommendations about how they might optimally solve their analysis task.

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.

- Categories: