Next-Gen Prompt Engineering: Provably Better

Tim Menzies (timm@ieee.org), Lohith Sowmiyan Panjalingapuram Senthilkumar (lpanjal@ncsu.edu)

NC State University

The rise of Large Language Models (LLMs) has fueled their rapid adoption across diverse fields, especially in software engineering, where their code generation skills and deep technical knowledge shine. While LLMs excel at handling common tasks, their ability to tackle complex, niche problems remains to be determined. Further, design setups must maximize accuracy and efficiency while minimizing computational and financial costs. In this study, we investigated the case study of using Large Language Models for multi-objective optimization problems in software engineering to address the question, “How do LLMs compare to Bayesian methods in solving optimization problems?”

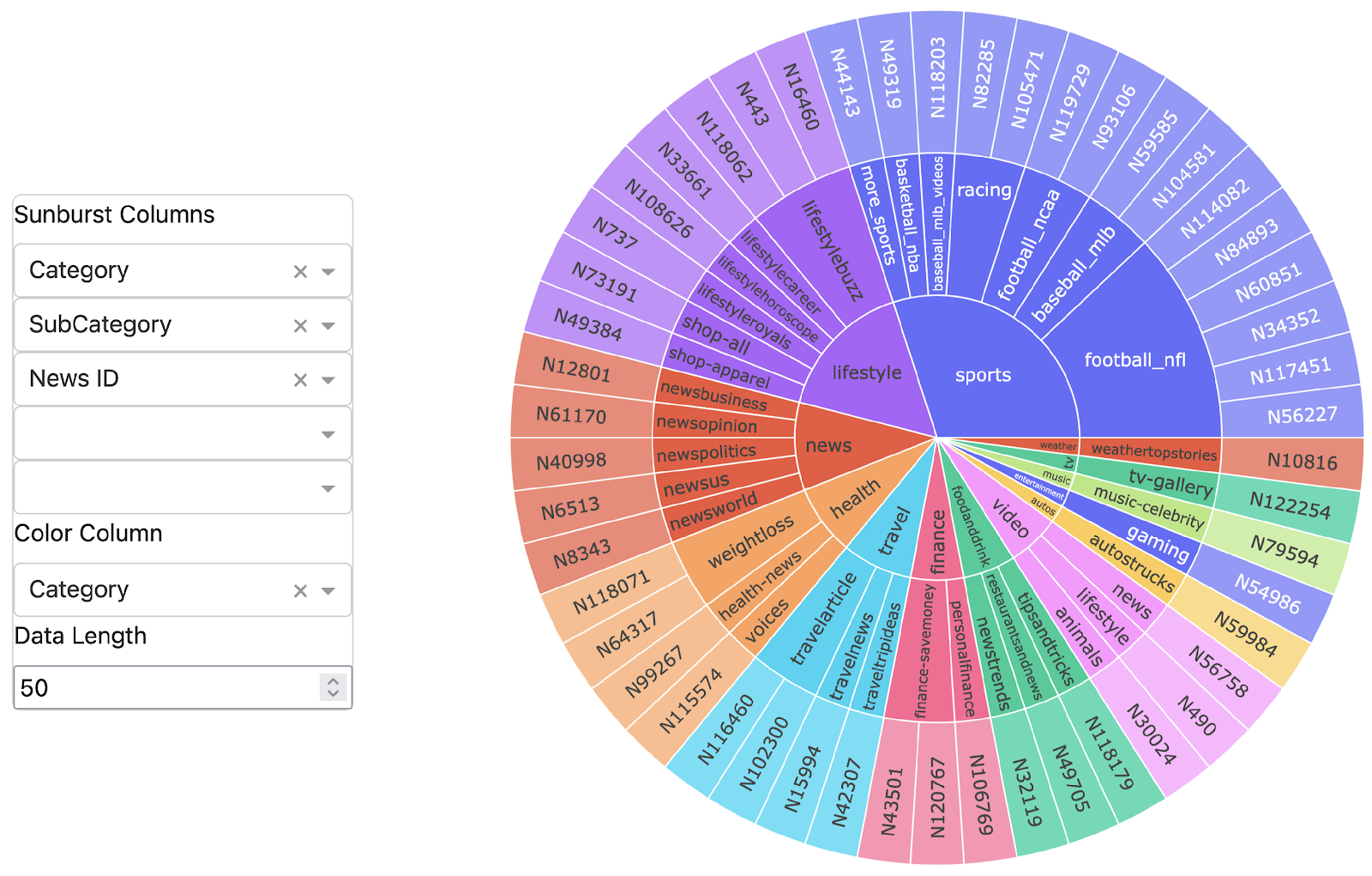

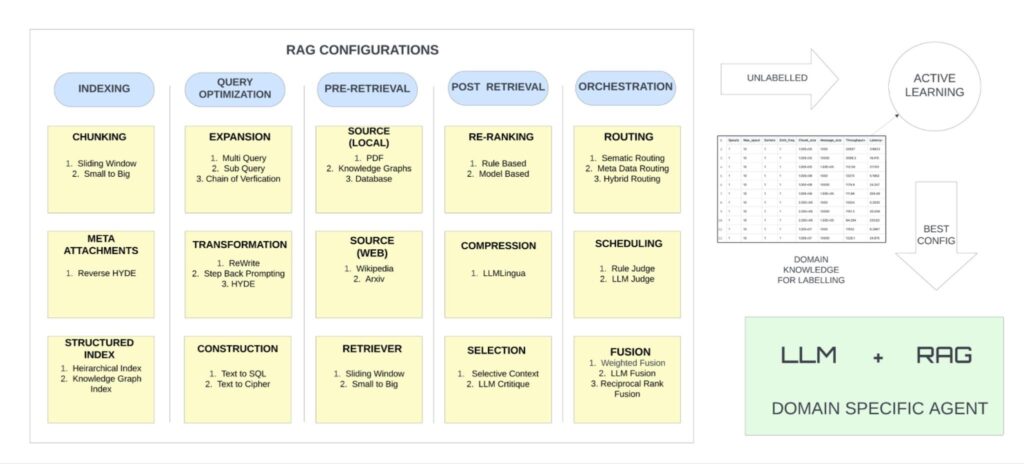

Retrieval-augmented generation (RAG) systems work by augmenting generative capabilities with information retrieval systems. These configurations include choices around retrieval sources, routing mechanisms, indexing strategies, reranking criteria, and balancing parameters like accuracy and query time. However, finding the optimal setup requires substantial experimentation, especially when the target maximizes a model’s accuracy while minimizing latency. This is where EZR, an innovative active learning system for RAG, comes into play. EZR explores RAG configurations under highly constrained budgets, EZR leverages TPE (Tree of Parzen Estimators)-based acquisition functions to iteratively sample the best settings.

Key Questions When Optimizing RAG Systems

The journey to finding the best RAG configuration often revolves around these fundamental questions:

- What are the best sources for routing? Sources define where and how the RAG model retrieves information, whether from internal databases, external APIs, or curated knowledge bases.

- What retrieval strategy works best? Retrieval can rely on techniques ranging from traditional search engines to dense neural retrieval. Understanding which strategy aligns

best with the task can drastically improve results. - How do we index our retrieval? Efficient indexing strategies reduce latency and enhance the relevancy of retrieved information.

- Should we rerank retrieved information? Reranking mechanisms help prioritize the most relevant results, especially in systems where responses must be highly accurate.

- What balance should we strike between accuracy and query time? This trade-off is essential, as high accuracy might come with increased latency, impacting overall efficiency.

Answering these questions effectively requires a flexible framework that adapts and tunes configurations based on real-world needs and constraints. The following diagram highlights many of these configuration choices, which are preprocessed into an unlabeled dataset. This dataset is fed into an active learner to select the next best configuration to label. The selected configuration is tested against the validation dataset and labeled for accuracy and query times. Once the budget is exhausted the resultant configuration would be the optimized RAG.

EZR: A Solution for Configuring RAG Systems in Software Engineering

In the search for optimal configurations, EZR steps in as a powerful active learner. Designed to explore RAG configurations under highly constrained budgets, EZR leverages TPE (Tree of Parzen Estimators)-based acquisition functions to iteratively sample the best settings. It’s a

low-footprint solution, written in under 1,000 lines of code—an impressive simplification compared to traditional optimization systems like Hyperopt, which can have over 10,000 lines and often become difficult to maintain.

Results: EZR Outperforms in Software Engineering Tasks

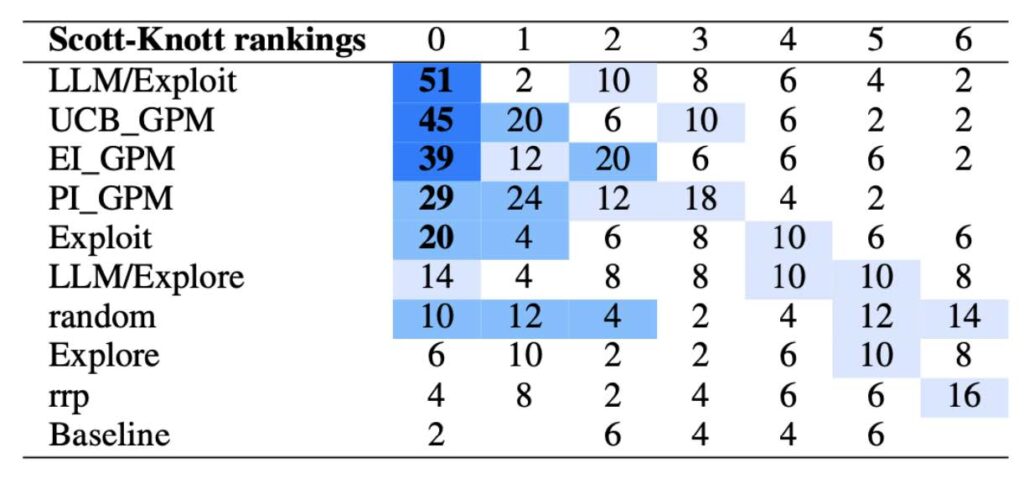

When paired with LLMs and configured for software engineering problems, EZR showed impressive results. It was tested on 45 well-established multi-objective optimization problems from the software engineering domain like SQL database configurations, hyperparameter optimization, network configurations which are largely similar to the RAG optimization problem. We could observe that our methods consistently outperformed classic optimization algorithms.

The combination of EZR’s TPE-driven approach and LLMs’ capabilities produced the best configurations over the datasets.

The EZR and LLM combination, configured to “exploit” the best configurations, achieved better outcomes than Gaussian Process-based Bayesian optimization. This finding underscores the value of EZR’s active learning approach, which adapts to task requirements in a flexible and computationally efficient way.

Specific Research Questions and Our Findings

- RQ1:Can Large Language synthesize better data from random samples? Yes, Large Language Models can synthesize better samples within a dataset.

- RQ2: Can Warm starting help simple Tree-structured Parzen Estimator(TPE) based acquisition functions outperform the complex Gaussian process Models? Yes, warm starting with LLMs makes the simple TPE-based functions outperform complex Gaussian Process Models.

- RQ3: Is the LLM warm start strategy applicable under low-budget scenarios? Yes, Warm-start with LLMs outperforms Bayesian-optimization methods with lesser budgets.

- RQ4: Are Large Language Models capable of synthesizing tabular data across dimensions? No, LLMs still lack the ability to provide similar performances for high-dimensional datasets compared to low and medium dimensions.

Implications for Software Engineering and Beyond

By enabling RAG configurations that don’t rely on large, opaque models, EZR promotes a more interpretable and manageable approach to retrieval-augmented generation. This is particularly valuable for teams looking to avoid over-reliance on black-box LLMs, which can be expensive, computationally demanding, and sometimes hard to interpret. With EZR, software engineers can achieve optimal configurations without needing to depend on fully encapsulated LLM-based solutions, potentially leading to more efficient, cost-effective, and transparent systems.

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.

- Categories: