Generative AI and Intelligence Analysis

Jacque J., Aaron W., Candice G., Hilary F., Heather B., Aziza J.

THE BIG PICTURE

What Are the Implications of Generative AI for Intelligence Analysis?

Over the past decade, Generative AI technologies across every modality (e.g., images, video, audio, and text) have become increasingly accessible to global audiences. They have also become more effective at producing synthetic media that closely resembles authentic media, due in part to the rapid evolution of new machine learning techniques. The proliferation of commercial and open-source tools that use these techniques to manipulate media makes it possible for a broad range of actors to generate convincing media depicting people who never existed and events that never occurred.

Deepfakes are images or recordings that have been convincingly altered and manipulated to misrepresent someone (Source: Merriam-Webster).

While there are relatively benign use cases for deepfakes, such as creating human resources videos, there are also nefarious uses with significant implications for national security. When adversaries create synthetic media or use technology to manipulate media, analysts must be able to identify not only which media may have been generated or manipulated, but also the purpose behind it. Why was the media created? What is its intended goal or outcome?

Matt Turek, the Deputy Director of DARPA’s Information Innovation Office (I2O), summed it up well in 2019 when he noted that “determining how media content was created or altered, what reaction it’s trying to achieve, and who was responsible for it could help quickly determine if it should be deemed a serious threat or something more benign.” [2]

ANALYTIC TRADECRAFT

How Can We Enhance Analysts’ Ability to Identify Generative AI & Manipulated Multimedia?

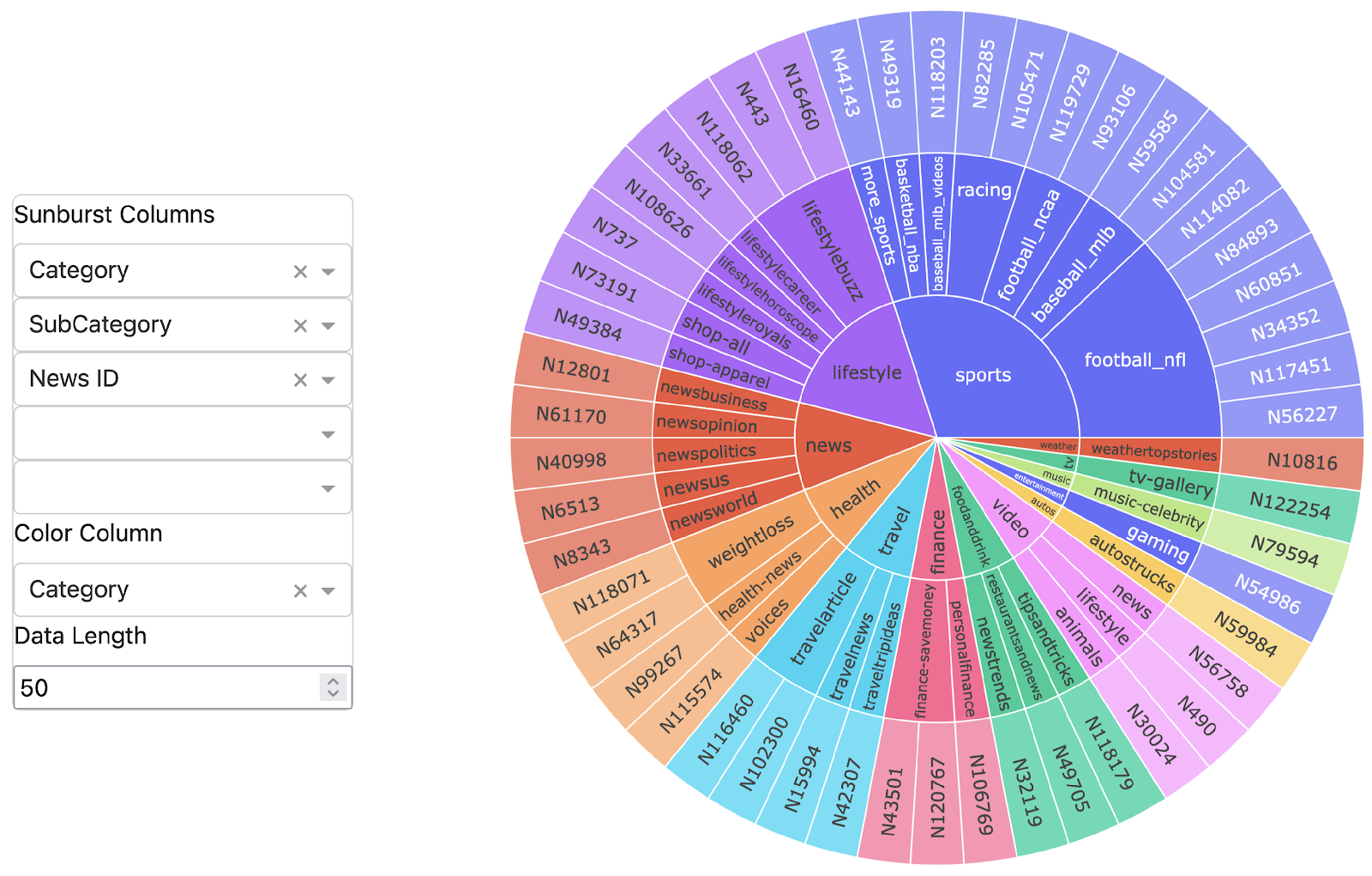

As Generative AI becomes more widely available and more powerful, intelligence analysts will need support in recognizing and understanding potentially manipulated media content they encounter throughout their workflows. Last year, LAS explored research conducted by the Rochester Institute of Technology (RIT) that aimed to improve analysts’ understanding of the techniques underlying deepfake detection. This work also helped shape aspects of DARPA’s SemaFor (Semantic Forensics) program, which focused on developing innovative technologies for analyzing potentially synthetic media.

SemaFor concluded in September 2024, but efforts to transition its work remain ongoing.

This year, LAS collaborated with Northwestern University’s Dr. Matt Groh to explore which characteristics analysts should consider when scrutinizing image content. Some intelligence analysts watched a half-hour training video covering characteristics to look out for, and noted afterward that they were immediately able to apply the training to their work and were better able to distinguish authentic images from synthetic ones. The initial feedback was positive and led directly to a follow-up study scheduled for November 2024.

ANALYTIC INTEGRITY & STANDARDS

How Do We Ensure Analytic Integrity When Dealing with Potentially Manipulated Media?

The principles of Analytic Integrity and Standards apply to all aspects of intelligence analysis workflows, including reviewing media content and assessing its authenticity. Analysts are responsible for ensuring their analysis meets the Analytic Tradecraft Standards established in Intelligence Community Directive 203, Analytic Standards [3]. Analysts must assess the reliability and credibility of the source, determine whether there is any reason to doubt the authenticity of the media, consider plausible alternative explanations for why media may have been generated or manipulated, determine the relevance of the media, and more. If the media appears to be synthetic or manipulated, analysts must know how to handle it appropriately. If the media appears to be authentic but is being used for misinformation or disinformation, analysts should also know how to convey that suspected nefarious intent. Intelligence reports should clearly convey both the authenticity or inauthenticity of the media and its purpose. Where there is uncertainty, that uncertainty should be conveyed as well, so that national security customers can make the best decisions possible with the information available to them.

NATIONAL SECURITY MEMORANDUM ON ARTIFICIAL INTELLIGENCE

What Are the National Security Concerns Surrounding Synthetic Media?

In October 2024, the White House published a National Security Memorandum (NSM) on artificial intelligence. The memorandum addresses three main themes: (1) advancing the United States’ leadership in artificial intelligence; (2) harnessing artificial intelligence to fulfill national security objectives; and (3) fostering the safety, security, and trustworthiness of artificial intelligence. The third theme includes a requirement for DoD and other federal agencies to:

“…include research on interpretability, formal methods, privacy enhancing technologies, techniques to address risks to civil liberties and human rights, human-AI interaction, and/or the socio-technical effects of detecting and labeling synthetic and authentic content (for example, to address the malicious use of AI to generate misleading videos or images, including those of a strategically damaging or non-consensual intimate nature, of political or public figures).”

The research that LAS and Northwestern are doing aligns closely with the expectations outlined in the NSM. To detect and label synthetic and authentic media, analysts must first understand the characteristics of synthetic and manipulated media.

Endnotes

1. Satariano, Adam, and Paul Mozur. “The People Onscreen Are Fake. The Disinformation Is Real.” New York Times, February 7, 2023. https://www.nytimes.com/2023/02/07/technology/artificial-intelligence-training-deepfake.html

2. “Uncovering the Who, Why, and How Behind Manipulated Media.” DARPA, September 3, 2019. https://www.darpa.mil/news-events/2019-09-03a

3. “Intelligence Community Directive 203, Analytic Standards.” ODNI. First published 2007; updated 2015; technical amendment 2022. https://www.dni.gov/files/documents/ICD/ICD-203_TA_Analytic_Standards_21_Dec_2022.pdf

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.
