Exploring WebGPU’s Potential for Near Real-Time AI on Edge Devices

LAS: Felecia ML., James S., Jasmin A.

Academic Collaborator: Shaw University (Fall 2024)

Abstract

The Laboratory for Analytic Sciences (LAS), in collaboration with senior design students from Shaw University, is exploring WebGPU as a cutting-edge solution for achieving near real-time AI/ML model processing on edge devices. This research evaluates WebGPU’s potential to enable efficient, browser-based deployment of AI/ML models across diverse device types, advancing the accessibility and performance of machine learning applications on consumer-grade hardware.

Background

In the rapidly evolving field of artificial intelligence, edge devices hold the potential to deliver real-time machine learning capabilities without the need for cloud infrastructure. This research evaluates WebGPU’s compatibility and performance on diverse edge devices, shedding light on its advantages and limitations in supporting AI at the edge.

WebGPU is a web API that exposes GPU rendering and general-purpose compute directly to web applications, making it a promising option for real-time AI processing on edge devices. Unlike its predecessor, WebGL, which targets graphics rendering, WebGPU supports compute shaders, which suit workloads such as the image generation and processing performed by generative adversarial networks (GANs). This study assesses how close WebGPU can come to near real-time results without specialized libraries, testing it on three popular devices: an AMD Ryzen 7 PC, Apple’s M2 MacBook Pro, and an Intel i5-based PC.
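Because WebGPU support differs across browsers and devices, a browser-based model typically needs to check for it before choosing a GPU or CPU path. The following is a minimal sketch of that check, not the team's implementation. The names `NavigatorLike`, `GpuEntryPoint`, `hasWebGpuEntryPoint`, and `chooseBackend` are hypothetical; only the `gpu` property and its `requestAdapter()` method mirror the real browser API.

```typescript
// Minimal structural types so the sketch compiles without extra type packages.
// In a browser, `navigator.gpu` has this shape when WebGPU is exposed.
interface GpuEntryPoint {
  requestAdapter(): Promise<object | null>;
}

interface NavigatorLike {
  gpu?: GpuEntryPoint;
}

type Backend = "webgpu" | "cpu";

// Synchronous check: is the WebGPU entry point exposed at all?
function hasWebGpuEntryPoint(nav: NavigatorLike): boolean {
  return nav.gpu !== undefined;
}

// Asynchronous check: the entry point can exist while no adapter is
// available, in which case requestAdapter() resolves to null.
async function chooseBackend(nav: NavigatorLike): Promise<Backend> {
  const gpu = nav.gpu;
  if (gpu === undefined) {
    return "cpu";
  }
  const adapter = await gpu.requestAdapter();
  return adapter === null ? "cpu" : "webgpu";
}
```

In a page, the browser's own `navigator` object would be passed in, and the returned value would decide whether the model runs on the GPU or falls back to the CPU.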

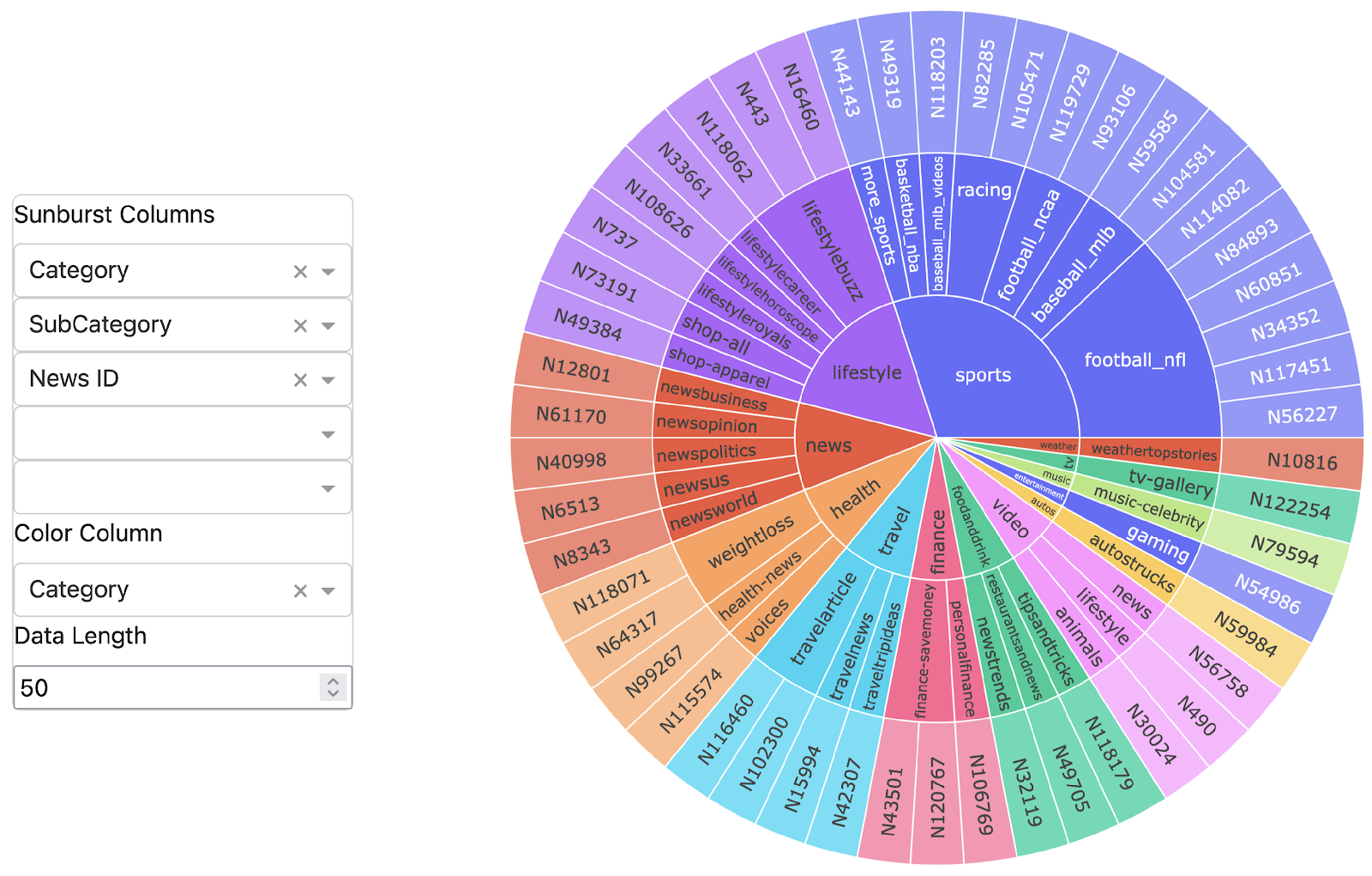

Experiment Setup: Devices and Datasets

To comprehensively evaluate WebGPU, the team tested the API on three different devices, each with a unique architecture and performance profile:

- AMD Ryzen 7 (Edge PC): Known for its high multi-core performance, this device serves as a benchmark for higher-end consumer-grade processing.

- M2 MacBook Pro: Apple’s proprietary M2 chip integrates advanced GPU capabilities and promises efficient performance in compact form.

- Intel i5-based PC: A common mid-range configuration; this device provides insight into WebGPU’s accessibility on more standard hardware.

The research used two datasets to evaluate performance across different image types and complexities:

- Drones Dataset: A relatively complex dataset featuring high-resolution images of drones.

- MNIST Dataset: A simpler dataset featuring 28×28 grayscale images of handwritten digits, which serves as a benchmark for basic AI processing.
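To make the size difference concrete, the sketch below converts one 28×28 grayscale MNIST image from bytes to 32-bit floats, the form typically uploaded to a GPU buffer. Scaling to the range [0, 1] is an assumption (GAN pipelines often scale to [-1, 1] instead), and `normalizeMnistImage` is a hypothetical helper name, not part of the study's code.

```typescript
const MNIST_SIDE = 28;
const MNIST_PIXELS = MNIST_SIDE * MNIST_SIDE; // 784 pixels per image

// Convert 8-bit grayscale pixels (0..255) to floats in [0, 1].
// Assumption: [0, 1] scaling; the study does not state its normalization.
function normalizeMnistImage(pixels: Uint8Array): Float32Array {
  if (pixels.length !== MNIST_PIXELS) {
    throw new RangeError(`expected ${MNIST_PIXELS} pixels, got ${pixels.length}`);
  }
  return Float32Array.from(pixels, (value) => value / 255);
}
```

One normalized image occupies 784 × 4 = 3,136 bytes, which helps explain why MNIST is light enough to serve as a baseline.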

Key Findings: Performance and Training Efficiency Across Devices

GPU execution through WebGPU reduced processing and training times relative to CPU execution on every device tested, with the size of the gain varying by device and dataset:

Device Performance

Performance tests conducted across the three devices revealed noticeable differences in CPU and GPU processing times using GANs:

- AMD Ryzen 7: Delivered the fastest GPU processing time, at 4.5 seconds for 1,000 images using the GAN discriminator model.

- M2 MacBook Pro: Demonstrated strong GPU efficiency, completing training on the high-resolution drone dataset in 20 minutes on the GPU, versus 30 minutes on the CPU. MNIST processing was much faster because its images are smaller and simpler.

- Intel i5-based PC: On the drone dataset, processing took much longer on the CPU than on the GPU.
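The AMD Ryzen 7 figure above (4.5 seconds for 1,000 images) can be turned into a per-image cost, as sketched below. The 30 frames-per-second budget is a hypothetical target chosen for illustration, and `throughputFromBatch` and `fitsFrameBudget` are hypothetical helper names. Note that an average over a batch is not the same as the latency of a single image.

```typescript
interface Throughput {
  msPerImage: number;
  imagesPerSecond: number;
}

// Average per-image cost derived from a whole-batch timing.
function throughputFromBatch(images: number, seconds: number): Throughput {
  if (images <= 0 || seconds <= 0) {
    throw new RangeError("images and seconds must be positive");
  }
  return {
    msPerImage: (seconds * 1000) / images,
    imagesPerSecond: images / seconds,
  };
}

// Does the average per-image cost fit within one frame at the target rate?
function fitsFrameBudget(msPerImage: number, targetFps: number): boolean {
  return msPerImage <= 1000 / targetFps;
}

// Reported result: 1,000 images in 4.5 seconds on the AMD Ryzen 7 GPU.
const ryzenGpu = throughputFromBatch(1000, 4.5);
console.log(ryzenGpu.msPerImage); // 4.5 ms per image on average
console.log(fitsFrameBudget(ryzenGpu.msPerImage, 30)); // true (budget is about 33.3 ms)
```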

Dataset Testing and Image Complexity

Performance across different datasets provided further insights:

- The simpler MNIST dataset benefited significantly from WebGPU’s acceleration, achieving faster processing with minimal resources.

- Higher complexity in the drone dataset resulted in longer training times, yet WebGPU still reduced these times considerably compared to CPU-based processing.

Training Time Comparisons

Training times varied across devices and datasets:

- AMD Ryzen 7: Completed drone dataset training in 14 minutes on the GPU, versus 35 minutes on the CPU.

- M2 MacBook Pro: Completed drone dataset training in 20 minutes on the GPU, versus 30 minutes on the CPU.

These results underscore the importance of powerful GPUs for efficient edge processing, as well as the role of image complexity in training duration.
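The reported drone dataset training times translate into speedup factors as follows. This is a small worked calculation using only the figures above; the type and function names are hypothetical.

```typescript
interface TrainingTimes {
  device: string;
  gpuMinutes: number;
  cpuMinutes: number;
}

// Drone dataset training times as reported above.
const ryzen7Training: TrainingTimes = { device: "AMD Ryzen 7", gpuMinutes: 14, cpuMinutes: 35 };
const m2Training: TrainingTimes = { device: "M2 MacBook Pro", gpuMinutes: 20, cpuMinutes: 30 };

// How many times faster the GPU run was than the CPU run.
function gpuSpeedup(t: TrainingTimes): number {
  return t.cpuMinutes / t.gpuMinutes;
}

// Share of CPU training time saved by running on the GPU, in percent.
function timeSavedPercent(t: TrainingTimes): number {
  return (1 - t.gpuMinutes / t.cpuMinutes) * 100;
}

for (const t of [ryzen7Training, m2Training]) {
  console.log(`${t.device}: ${gpuSpeedup(t).toFixed(1)}x, ${timeSavedPercent(t).toFixed(0)}% saved`);
}
// AMD Ryzen 7: 2.5x, 60% saved
// M2 MacBook Pro: 1.5x, 33% saved
```

On these numbers, the Ryzen 7 gained more from its GPU (2.5×) than the M2 did (1.5×), even though the M2's CPU run was the faster of the two.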

Conclusion: WebGPU for Near Real-Time AI on Edge Devices

WebGPU consistently reduced processing and training times across the devices and datasets tested. While differences in device capability affected the results, the API proved capable of enabling efficient near real-time machine learning on consumer-grade hardware. For applications requiring rapid processing, such as drone image recognition or handwritten digit classification, WebGPU offers a promising pathway for edge AI.

Lessons Learned and Recommendations

The findings highlight WebGPU’s potential while offering recommendations for maximizing performance:

- Leverage GPU Capabilities: Devices with powerful GPUs, such as the AMD Ryzen 7, yielded the best results, emphasizing the importance of GPU power for real-time AI.

- Optimize Image Complexity: Using smaller, simpler images (e.g., 28×28) can significantly improve processing times, as shown with the MNIST dataset.

- Augment Data and Resources: Increasing training data can improve model accuracy without compromising speed, making WebGPU an attractive option for developers looking to scale machine learning on edge devices.

Limitations and Considerations for Future Work

While promising, WebGPU’s performance varies depending on device capabilities. Larger, more complex models may struggle on certain edge devices or experience increased memory usage, underscoring the importance of optimizing both hardware selection and model architecture. Further research could explore specialized optimizations for larger datasets or more complex models, paving the way for WebGPU to support a broader range of real-time AI applications.
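One way to reason about the memory concern is to estimate how many images fit in a single GPU buffer, as in the rough sketch below. The 512×512 RGB drone image size and the 128 MiB buffer limit are illustrative assumptions, since the study states neither; `bytesPerImage` and `maxBatchForLimit` are hypothetical helpers.

```typescript
const BYTES_PER_FLOAT32 = 4;

// Size of one image stored as 32-bit floats, one float per channel value.
function bytesPerImage(width: number, height: number, channels: number): number {
  return width * height * channels * BYTES_PER_FLOAT32;
}

// Largest whole number of images that fit within a buffer size limit.
function maxBatchForLimit(
  width: number,
  height: number,
  channels: number,
  limitBytes: number,
): number {
  return Math.floor(limitBytes / bytesPerImage(width, height, channels));
}

// Assumption: an illustrative 128 MiB limit, not a measured device value.
const ASSUMED_LIMIT_BYTES = 128 * 1024 * 1024;

console.log(maxBatchForLimit(28, 28, 1, ASSUMED_LIMIT_BYTES)); // 42799 MNIST images
console.log(maxBatchForLimit(512, 512, 3, ASSUMED_LIMIT_BYTES)); // 42 hypothetical drone images
```

In a browser, the actual limit would be read from `device.limits.maxStorageBufferBindingSize` on the `GPUDevice` instead of the assumed constant.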

Final Thoughts

WebGPU has the potential to redefine what’s possible for real-time machine learning on edge devices, especially for lightweight AI tasks like image classification and simple GAN applications. As the technology matures and more powerful edge devices become available, WebGPU could play a vital role in enabling accessible and efficient AI at the edge, opening up new possibilities for developers and industries alike.

About Shaw University

Shaw University, a prominent Historically Black College and University (HBCU) located in Raleigh, North Carolina, is recognized for its commitment to fostering educational excellence, particularly in STEM and cybersecurity fields. Shaw University is pursuing the Center of Academic Excellence in Cyber Operations (CAE-CO) designation as part of its ongoing mission to enhance academic and professional opportunities for students. This prestigious designation highlights institutions excelling in cybersecurity education, training, and research. Shaw is actively working to become North Carolina’s only institution with the CAE-CO designation, positioning itself as a leader in cybersecurity education and preparing graduates for impactful careers in a field of critical national importance.

In 2023, the LAS supported the establishment of a Minority Serving Institution (MSI) Cooperative Research and Development Agreement (CRADA) with Shaw University, paving the way for expanded partnerships with the Department of Defense (DoD) in cybersecurity. Through the MSI CRADA, the LAS began its partnership with Shaw in fall 2024 on the senior design project “Exploring the Potential of WebGPU for Near Real-Time Edge Devices.”

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.
