Conformal prediction for knowledge graphs

Matthew Singer, Karl Pazdernik, and Srijan Sengupta

North Carolina State University

Introduction

In a world overflowing with data, knowledge graphs have emerged as powerful tools for organizing and retrieving meaningful information. A knowledge graph (KG) is a graph-based representation of information about various entities (such as individuals, organizations, locations, or concepts) and their relationships. The transformation of unstructured language data into a structured KG facilitates downstream tasks like information retrieval, association mining, question answering, and machine reasoning. A celebrated example is the Google Knowledge Graph of 800 billion facts on 8 billion entities, from which Google serves relevant information in an infobox in response to a search.

While KGs are invaluable for decision-making, their results inherently carry statistical uncertainty since no specific model output can be assumed to be fully accurate. The opaque, black-box nature of current models further complicates the reliable quantification of this uncertainty. Most black-box models cannot inform the user how they arrive at any given answer, making it difficult for end users to deteremine when (and to what extent) they should rely on the answer. Accepting biased or inaccurate recommendations can lead to suboptimal decisions, with particularly high stakes in critical decision-making scenarios. Thus, effective human-machine collaboration in this setting is hampered by a lack of trust in the algorithm’s results.

The goal of this project is to address this gap by developing a statistical framework to provide prediction sets for KGs. Given a certain target coverage (say 99%), this method produces a set of model outcomes such that the correct answer is guaranteed to be included in the set with 99% probability. This is in contrast to the existing approach to KGs where the model produces a single answer whose probability of being correct is typically unknown. By constructing an uncertainty-aware technique for KG construction, our method empowers end-users to make informed and trustworthy decisions pertaining to KGs.

How are knowledge graphs constructed?

Knowledge graphs aim to extract the information in unstructured documents by identifying relationships between pairs of entities. This involves three steps: Named Entity Recognition (NER), Named Entity Disambiguation (NED), and Relationship Extraction (RE).

1. Named Entity Recognition (NER)

NER is the process of identifying words or phrases in text that refer to entities—like names of people, places, organizations, dates, or any specific “named” thing. Consider the following example:

“Messi scored two goals in Argentina’s 3-0 victory over Croatia in the 2022 FIFA World Cup semifinals.”

The NER system would identify:

- “Messi” as a Person

- “Argentina” as Organization

- “Croatia” as Organization

- “2022 FIFA World Cup” as an Event

These recognized entities form the foundation of the knowledge graph by representing specific, identifiable pieces of information that can be further linked. Note that NER does not identify which entity in a knowledge base the area of text is referring to, only that a specific string of words could be linked to an entity in a knowledge base.

2. Named Entity Disambiguation (NED)

NED links recognized entities to specific entries in a knowledge base (e.g., Wikipedia), which is essential for resolving ambiguity. When given an individual named entity (called a surface form) as input, the NED model performs an intermediate candidate generation step to identify a small number of possible Wikipedia articles. The full NED model is then deployed on each candidate to perform the disambiguation and determine which Wikipedia article is most likely. If none of the candidate articles are likely then the model also has the option to return ‘Not linked to Knowledge Base’.

In our example, NED would ensure that:

- “Messi” refers to the footballer Lionel Messi, not any other person with the same name.

- “Argentina” refers to the national football team rather than the country as a geographical entity.

- “Croatia” refers to the Croatian national football team rather than the country.

- “2022 FIFA World Cup” points specifically to the international football event held in Qatar

The NED model takes the word “Argentina” as the input, generates multiple candidates (e.g, the country Argentina, historical geopolitical entities related to Argentina, the government of Argentina, the national football team, etc.) and outputs “Argentina national football team” as the most likely Wikipedia article. Here the word “Argentina”’ is referred to as the surface form of a entity (how it appears in text) and “Argentina national football team” is referred to the underlying form (the specific database entry). NED models use context from surrounding words and phrases, as well as existing entries in a knowledge base. For example, knowing that “Argentina” is in the context of a football match, the NED system would associate it with the Argentinian national team rather than the country itself.

3. Relationship Extraction (RE)

Relationship extraction identifies and categorizes the relationships between entities, which provides structure to the knowledge graph by defining connections between entities. The output from this step consists of all tuples of the form Entity 1 → relationship → Entity 2, for example:

- Lionel Messi → member of → Argentina national football team

- Argentina national football team → defeated → Croatia national football team

- Argentina national football team → participated in → 2022 FIFA World Cup

- Croatia national football team → participated in → 2022 FIFA World Cup

Relationship extraction can be performed with rule-based methods, which define specific patterns to look for in text, or with machine learning techniques that learn common relationships from annotated data. In recent years, transformer-based models have become popular for relationship extraction because they can understand complex sentence structures and context.

How to carry out uncertainty quantification for knowledge graphs?

As we can see from the above example, KG construction involves a complicated series of natural language processing tasks, with the opportunity of mistakes at any stage in the process. Furthermore, given the sequential nature of these tasks, any error made at an earlier step will propagate through the pipeline, making it difficult to identify its root source in the final output.

Conformal Prediction is a highly flexible statistical framework that produces prediction sets (instead of individual predictions) that are guaranteed to contain the correct answer with a certain probability.

The data is split into two partitions: training and calibration. After a model is trained using only the training data, we evaluate how well that model performs on the separate calibration set. Model outputs are then ranked from most likely to least likely (as per the model). We then store how many outputs it took to reach the correct response (the answer index), the model probability of the correct response (local probability), and the sum of all model probabilities needed to get to the correct response (cumulative probability). These three metrics of index, local probability, and cumulative probability represent the three possible non-conformity scores that will be used to generate the prediction sets. The non-conformity score is a function that quantifies the discrepancy between the correct answer and the model-predicted answer. The key is to find a way to generate the smallest prediction sets (model efficiency) while maintaining the desired accuracy (model validity).

From this point onward, the idea of conformal prediction is simple. Let’s imagine that on the calibration dataset, 99% of observations occur within the first three model responses. Then, for any random response in the calibration set, we can see how a prediction set of the first three responses should contain the correct answer with a 99% probability! This same idea can be used to construct a prediction using the 99% quantile of cumulative probabilities or the 1% quantile of local probabilities. Note: we utilize the 99% for cumulative probabilities or model indexes because as the index/cumulative probability increases, the model confidence in the last answer decreases. This is the opposite for the local probability as the model should be less confident the smaller the local probability is.

Illustration

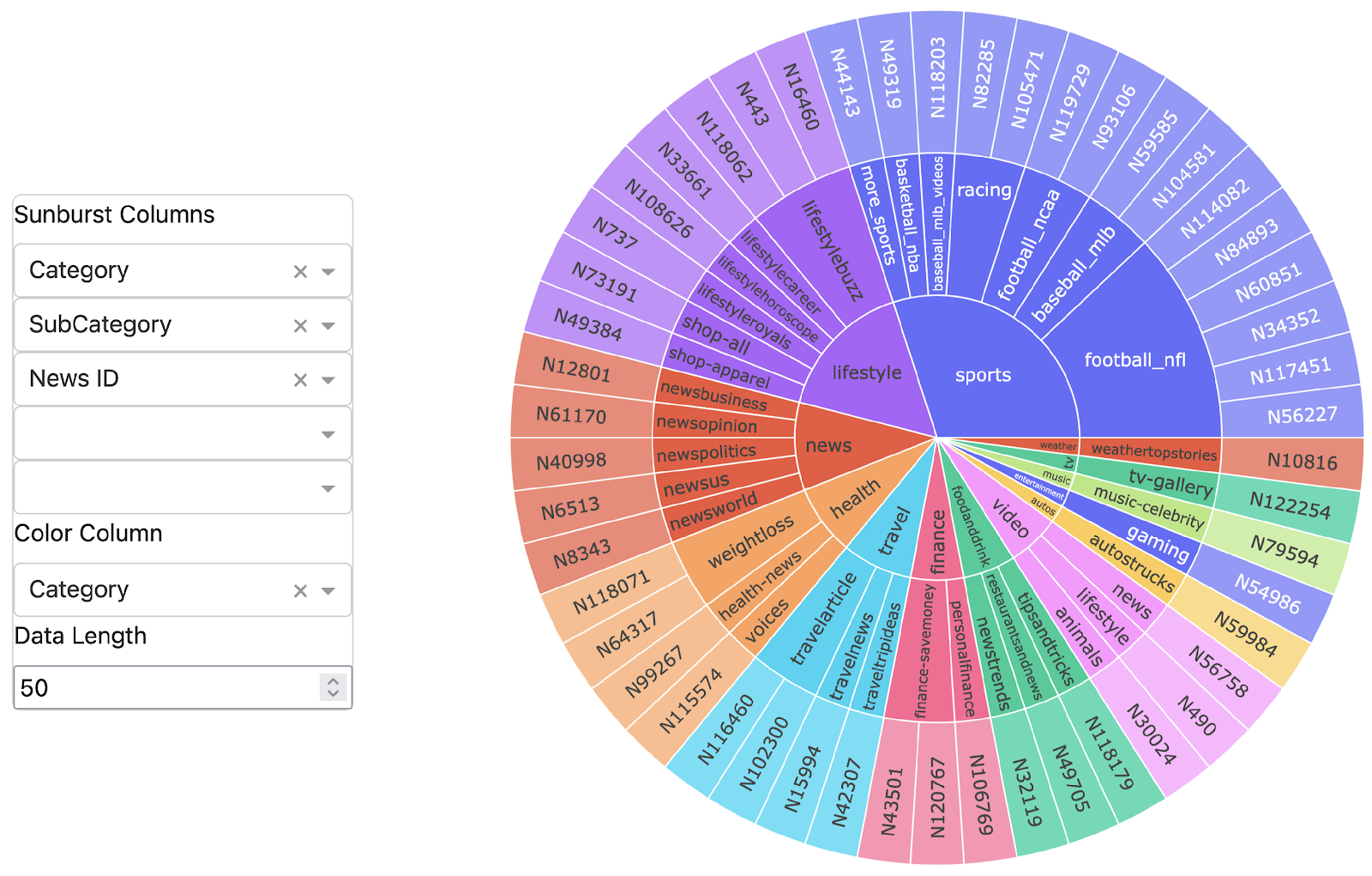

We constructed an interactive streamlit application for users to easily implement our conformal prediction algorithm and produce prediction sets. This application allows users to customize the stepwise confidence levels for NER, NED, and RE. The model will then perform all three steps of KG construction and display the results of each step independently.

As an illustration, suppose we input the sentence from our running example into the app.

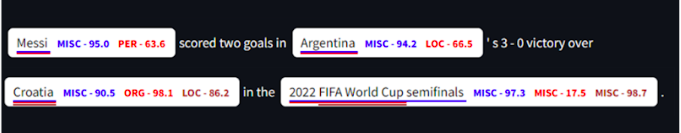

Figure 1 depicts the NER output from the application for a confidence level of 99%. Named entities are highlighted in a white box and a colored underline, enabling overlapping entities to be identified clearly. We used NER ontologies from the CoNLL 2003 benchmark dataset: People, Organizations, Locations, and Miscellaneous. The numbers correspond to the minimum confidence level for that specific output; note that a smaller number means that the model is more confident that the output is correct.

We observe that the NER model, left to itself, would output the named entity “Croatia” as a location, which is wrong because here “Croatia” actually refers to the national football team, an organization entity. Our UQ algorithm produces a 99% prediction set consisting of three outputs, location, miscellaneous, and organization thus including the correct answer. This enables the end user to consider a set of plausible answers – guaranteed to include the correct answer with 99% confidence – and choose the right one, instead of having to trust the single output from the NER model.

This also shows how the UQ framework allows for multiple overlapping NER candidates to be propagated to the NED step. As seen in Table 1, the output of our NED model for these overlapping entities drastically changes, and an incorrect result from this stage in NER would have caused a large impact on the output of our KG model. Notably, because the calibration of each step in the KG pipeline is separate, we may choose to perform NED with a 90% confidence level regardless of our NER’s 99% confidence level. The percentage next to each underlying form in Table 1 is the minimum confidence level of that output.

| Messi | Argentina | Croatia |

| Lionel Messi (58%) | Argentina national football team (83%) | Croatia national football team (79%) |

| Argentina under-23 national football team (90%) | ||

| Argentina under-20 national football team (90%) | ||

| 2022 FIFA World Cup | FIFA World Cup | 2022 FIFA World Cup Semifinals |

| 2022 FIFA World Cup (75%) | FIFA World Cup (85%) | Not linked to Wikipedia (2%) |

| Not Linked to Wikipedia (88%) |

The final step is to generate the prediction sets for RE and merge all results together for entry into the knowledge graph. Table 2 displays the results for RE at a 90% confidence level. Notably at the 90% confidence level we do not identify the fact that Messi is a member of the Argentina national football team nor do we identify the result of the game. If the confidence level were to be increased to above 92% we would see that the prediction set contains (2022 FIFA World Cup semifinals, has part, 3-0 victory over Croatia), therefore correctly retrieving that piece of missing information! Like previous outputs, the ‘Min Confidence’ column depicts the minimum confidence level a given relationship appears in.

| Subject | Relationship | Object | Min Confidence |

| 2022 FIFA World Cup | Participating team | Argentina | 86% |

| 2022 FIFA World Cup | Participating team | Croatia | 83% |

| 2022 FIFA World Cup | Point in time | 2022 | 80% |

| 2022 FIFA World Cup Semifinals | Participating team | Croatia | 79% |

| 2022 FIFA World Cup Semifinals | Participating Team | Argentina | 86% |

| 2022 FIFA World Cup Semifinals | Participant | Croatia | 86% |

| Argentina | Participant in | 2022 FIFA World Cup | 86% |

| Croatia | Participant in | 2022 FIFA World Cup | 86% |

This material is based upon work done, in whole or in part, in coordination with the Department of Defense (DoD). Any opinions, findings, conclusions, or recommendations expressed in this material are those of the author(s) and do not necessarily reflect the views of the DoD and/or any agency or entity of the United States Government.

- Categories: