GUESS 2023: Enabling a Conversation with Data

Mike G., Patti K., Susie B., Sue Mi K., Skip S.

GUESS explores how artificial intelligence and machine learning can help an analyst identify relevant information in poor speech to text transcriptions. GUESS was motivated by outcomes from LAS’s PandaJam study. In 2022 we introduced a prototype that uses a pre-trained sentence embedding model to enable semantic search and topic clustering of speech to text corpora. A custom user interface allows a user to iteratively find relevant information in a large amount of text data in an efficient manner. The user may suggest different search parameters, including natural language questions, and discover the context of relevant topics in a corpora. In this manner, the user Gathers Unstructured Evidence with Semantic Search. In 2023 we continued to build on these efforts. This article describes the key motivations and accomplishments of current work on the GUESS application.

Motivation: Decision Support & Human-Machine Teaming

Intelligence analysis is a type of knowledge work that involves gathering information and making a series of decisions. For analysts, these decisions may include target selection; developing query parameters; selecting relevant information from query results; creating and choosing among associative, predictive, or prescriptive conclusions; or determining how to best aggregate information for dissemination. The LAS PandaJam study sought to understand what decisions analysts seek to make during information retrieval and sensemaking tasks, and how they currently do so. The study showed, among other things, that keyword searches of speech to text transcriptions were insufficient for finding information relevant to specific analytic questions in low quality audio recordings such as the Nixon White House Tapes. Additional barriers to effective decision making and analysis were identified. Poor speech to text transcription not only meant that keyword search was an unreliable method of filtering data, but also made it difficult for analysts to select relevant information from query results and to efficiently form a coherent understanding of the context of the topic of interest.

We believe enabling humans to leverage AI’s capabilities to augment rather than replace human tradecraft will lead to better decision outcomes. The combination of technology and people can be considered as a human-machine team, where each team member contributes as best suited to their abilities in order to attain an outcome better than either could attain independently. “Better” might mean analysis that is more thorough, more efficiently completed, or both. GUESS was initially designed as an information retrieval aid that supported analysts as they made decisions that would help them identify key information in room audio using speech to text transcriptions, possibly of poor quality, and to help choose alternative keywords for a keyword search that would be successful in the speech to text domain. Our 2022 work focused on building the framework for these capabilities. Using a pre-trained, off the shelf sentence transformer model to create vector embeddings of all of the machine transcribed sentences in the Nixon transcript corpus allowed the analyst to provide a question or other natural text input, as well as other search parameters to find relevant text in the corpus. The application returns to the analyst a ranked list based on semantic similarity, as well as clusters of similar sentences in relevant documents. These clusters represent a type of topic modeling that allows the analyst to explore the context of topics of interest in order to find additional information or refine their search strategies.

GUESS also provided an interactive visualization that allows users to understand the spatial concepts underpinning the similarity search and clustering methodologies the application employs.

In order to function effectively as a decision-making team, the analyst and AI must understand each other’s strengths and weaknesses, fostering alignment, and calibrate trust. Alignment addresses whether the AI is actually doing the task that people think it is supposed to do in a way that reflects the user’s goals and values.

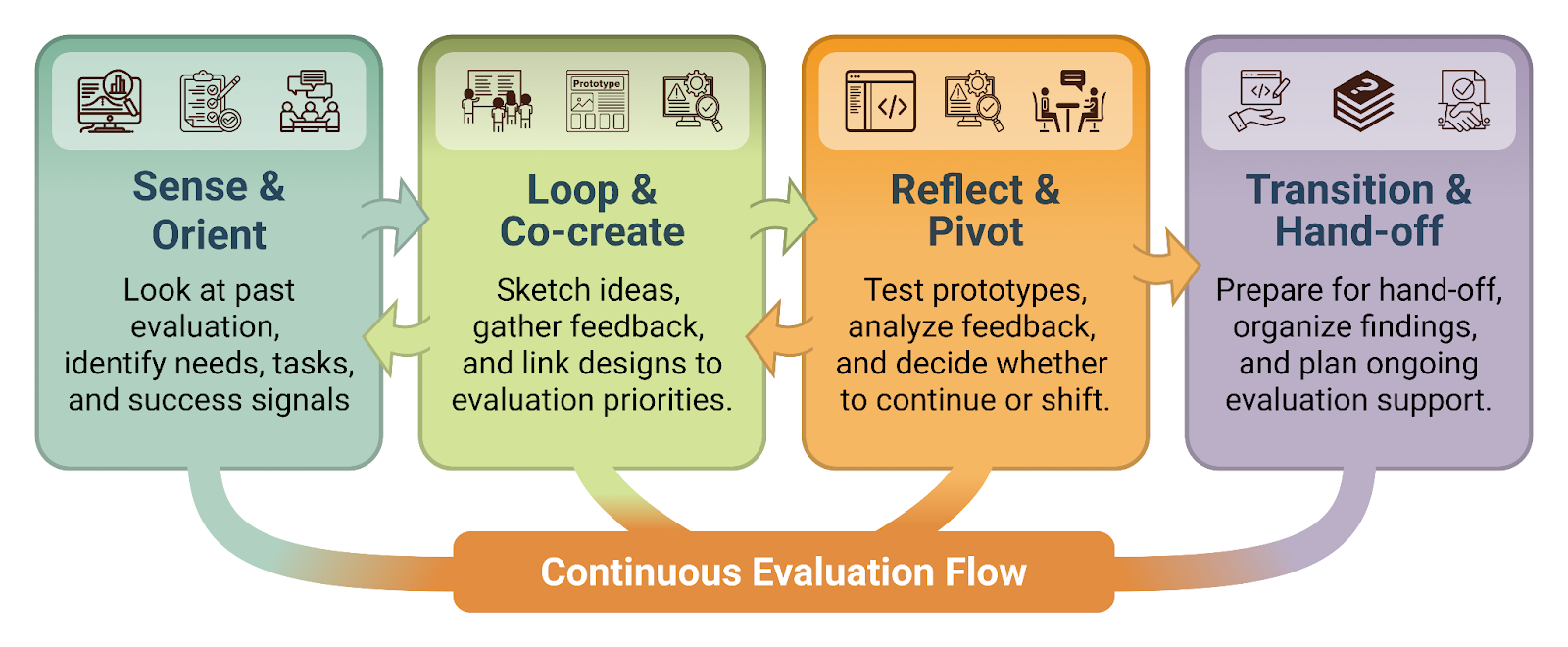

Trust calibration refers to gauging how much to trust the output of an AI and, based on that level of trust, using the output appropriately. Users should never trust the output completely, but should nevertheless be able to find applications where that output is useful. Interactions between the humans, the machine learning algorithms, and the data used to make decisions can be thought of as a conversation. Through this conversation, alignment of purpose occurs and trust is established and calibrated. GUESS takes a user-centric, human in the loop approach to foster an iterative conversation with the data and the underlying ML capabilities. Rather than providing the analyst with an “end prediction” such as a ranked list produced by a recommender, or a label produced by a classifier, GUESS allows analysts to iterate through various ways of analyzing the data, and to seamlessly change their questions and search parameters as they do so, thus simultaneously giving them a better understanding of the data they are investigating and the technical capabilities of the application, ultimately helping them more effectively find key information. This approach fosters alignment and calibrates trust between the user and the machine learning technology and increases the usefulness of the tool. In this manner, GUESS creates a tangible example of effective human machine teaming that can optimize analysts’ decisions and analytical outcomes.

We take a human centric and task driven approach to machine learning applications. We consider that the human may not even initially be certain of the task they are trying to accomplish and can be expected to modify the task based on their observations of the abilities of their AI teammate. By giving analysts new ways to interact with their data, analysts’ decision-making process may evolve. In 2023, we have created additional functionality to give analysts more opportunities to find and make sense of relevant information in speech to text corpora. GUESS now includes the ability to explore topics in the time dimension using interactive topic segmentation and supporting visualizations.

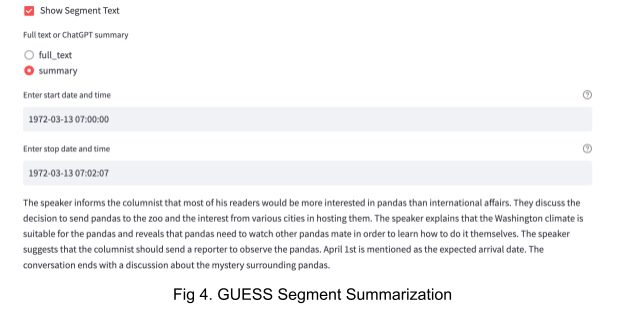

We also explore the use of Large Language Models and demonstrate how current frontier AI capabilities may support analytic workflows and how we may help analysts understand and appropriately gauge trust in these capabilities. We use the OpenAI ChaptGPT API to summarize segments of transcripts as well as to perform question and answering on the entire corpus via retrieval augmented generation (RAG). RAG methods first query a trusted data source and then prompt a LLM with the results to summarize or answer a question in fluent natural language.

Technical Considerations

For those building AI capabilities, GUESS provides an example of a modular AI system that includes multiple machine learning capabilities and services, vector stores, a custom user interface, and humans to effectively augment analytic decision making. GUESS is built using python code running as microservices in docker containers. It uses open source packages for machine learning functionality, including the transformer library from huggingface.co and mlserver from Seldon.ai. We use pre-trained, open source language models for sentence embeddings, one of which supports over 50 languages. The open source python package Streamlit is used for the creation and serving of a user interface. We use both an in-memory sqlite database as well as elasticsearch, as the latter provides a scalable vector store capability along with approximate k-nearest neighbor search. Interactive visualizations are created with the plotly python library. Abstractive summarization and question answering with retrieval augmented generation use the public API for OpenAI’s GPT3.5-turbo large language model. But none of it works without a human in the loop.

Future Work

Future directions for GUESS will include additional user studies evaluating the effectiveness of user in the loop processes for analytic decision making. We will continue to apply GUESS to additional language corpora and other text domains beyond speech to text. We will also continue to make the application more accessible and more reliable for additional testers both low side and high side for mission evaluation.To support this testing, we will design feedback mechanisms in the user interface to capture user engagement and usage patterns. We want to formalize evidence supporting the concepts of alignment and trust calibration. In addition, there are several open technical research areas we intend to pursue, including improving and testing topic segmentation methods and evaluating and fine-tuning text embedding capabilities.

- Categories: