Building a More Explainable Analyst-Centered Deepfake Detection Tool

Saniat J. Sohrawardi, Y. Kelly Wu, Matthew Wright (Rochester Institute of Technology)

John L., Jacque J., Aaron W., Candice Gerstner

Imagine an international summit in the near future. World leaders gather, tensions simmering beneath the surface. During a confidential meeting, a video emerges online. It allegedly captures one of the leaders making a surprising, fiery statement about another country, one that could strain relations between the two nations. The footage spreads across social media, triggering outrage and calls for action.

Enter intelligence analysts, tasked with verifying the video’s authenticity. They scrutinize every pixel, cross-reference metadata, and trace its origin. Is this video genuine, or a meticulously crafted deepfake? Alliances, peace, and lives hang in the balance.

In an era when deepfakes threaten to destabilize nations and compromise global security, the role of intelligence analysts has never been more critical. When the course of international relations can turn on a single video, analysts need a robust deepfake detection tool. Our mission is to create a tool that not only detects deepfakes but is built specifically around the needs of intelligence analysts, ensuring both reliability and explainability.

Navigating the Labyrinth of Deepfakes

As information becomes easier to manipulate with unprecedented sophistication, the threat of deepfakes looms large. These synthetic creations, born of deep learning algorithms, have evolved from mere novelties into potential instruments of misinformation and chaos. During the 2022 Russia-Ukraine conflict, manipulated videos circulated widely, including a fabricated video of Ukrainian President Volodymyr Zelenskyy appearing to urge his troops to surrender, showing how deepfakes could be used to alter the geopolitical landscape.

Recognizing the gravity of this threat, our project asks: How can we equip our nation’s intelligence analysts with tools that not only keep pace with the evolving deepfake technology but also align with their unique needs and expertise?

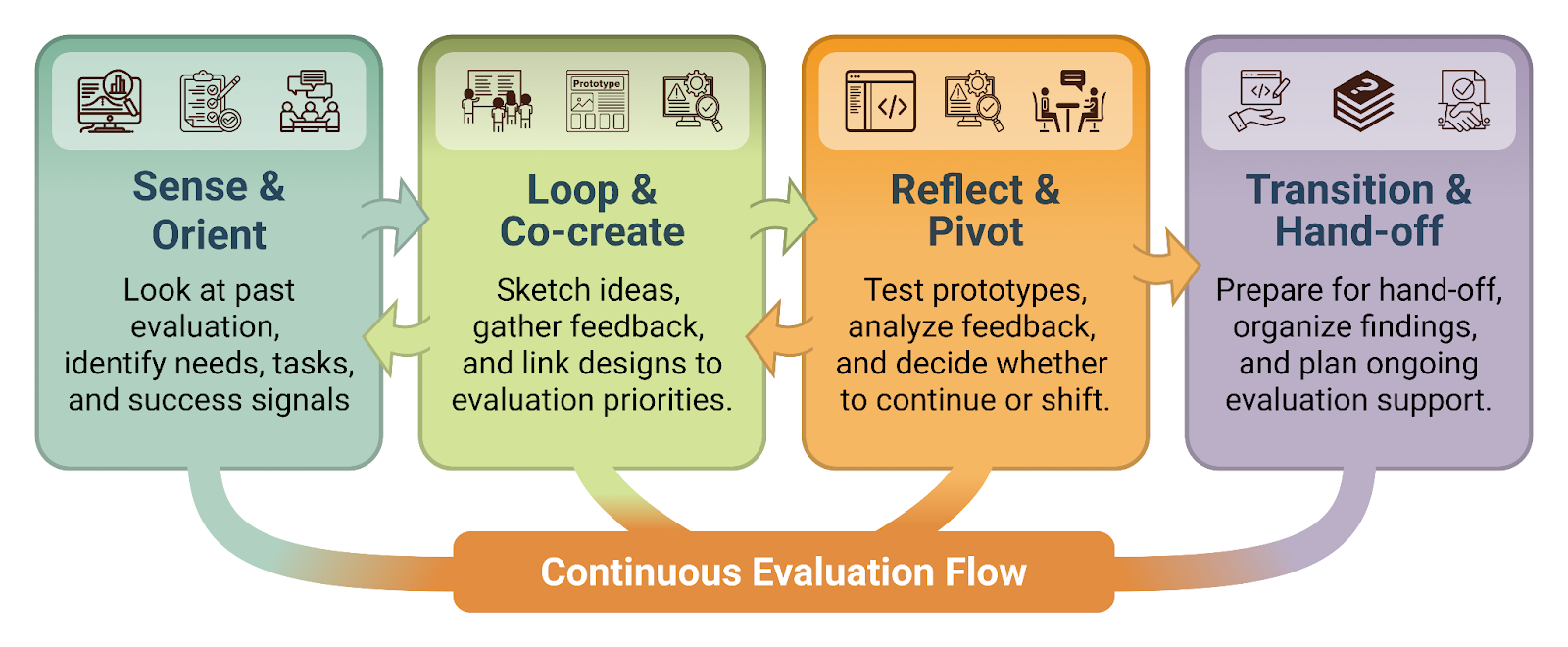

Our project takes a mixed-methods approach, combining interviews, surveys, and prototyping to understand the requirements of intelligence analysts. We are currently integrating our findings into the tool’s design, adding new features and an extensible design to address future needs.

Interviews & Findings: Unveiling Analysts’ Perspectives

Our research explored the daily work of intelligence analysts through two rounds of interviews with a diverse group of 21 participants. The cohort included both active analysts and people with prior analytical experience, with expertise across media formats: images, video, text, and audio.

Analyst Workflow and Challenges

A recurring theme in these interviews was work efficiency. Analysts expressed frustration with juggling multiple tools that lack standardized metrics and emphasized the need to integrate deepfake detection seamlessly into their existing workflow. Analysts also frequently compile reports to communicate findings to audiences ranging from fellow analysts to decision-makers. This tedious task would benefit from tools that make the reasoning behind analysis choices clear and explain the results obtained.

Deepfake Familiarity and Concerns

Participants varied in their familiarity with deepfakes. Most understood that deepfakes are synthetic and imitate real people or events, and some emphasized the role of intent. Of all modalities, video was the analysts’ primary concern, partly because video content is so accessible: a key theme of the interviews was how rapidly misinformation spreads today. Participants stressed the serious threats deepfakes pose at both personal and societal levels, underscoring the critical role analysts play in countering the negative impacts of manipulated media.

Desired Features for Deepfake Detection Tools

Participants highlighted several features that would enhance their analytical capabilities. Beyond our initial goal of clearly displaying detection results and their explanations, participants wanted the option to incorporate traditional analysis methods, which calls for an adaptable design. They were also interested in new features, including content quality warnings, integrated notes for later collaboration, and detailed descriptions of the detection methods used. Echoing the frustration noted earlier, participants wanted consistent metrics, citing the ICD 203 analytic standards as an example. Batch processing came up often: participants wanted to triage groups of content to identify higher-priority items. Finally, many participants stressed the importance of report generation to help them aggregate their findings, supported by evidence from the tool that different types of stakeholders could understand.
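Two of these requests, consistent metrics and batch triage, can be illustrated together. The sketch below is a minimal illustration, not the DeFake implementation. It assumes each item already has a calibrated probability of manipulation (raw detector scores usually are not calibrated), maps that probability to the standard likelihood expressions defined in ICD 203, and sorts a batch so the most suspicious items surface first. The `Item` class, the `triage` function, the file names, and the 0.5 cutoff are hypothetical.

```python
from dataclasses import dataclass

# ICD 203 standard expressions of likelihood, as (upper bound, term).
# A probability exactly on a boundary is assigned to the lower band (our assumption).
ICD203_BANDS = [
    (0.05, "almost no chance"),
    (0.20, "very unlikely"),
    (0.45, "unlikely"),
    (0.55, "roughly even chance"),
    (0.80, "likely"),
    (0.95, "very likely"),
    (1.00, "almost certain"),
]


def icd203_term(p: float) -> str:
    """Map a probability in [0, 1] to an ICD 203 likelihood expression."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be in [0, 1]")
    return next(term for upper, term in ICD203_BANDS if p <= upper)


@dataclass
class Item:
    name: str
    fake_prob: float  # hypothetical calibrated probability of manipulation


def triage(items, threshold=0.5):
    """Keep items at or above the threshold, most suspicious first, with ICD 203 wording."""
    flagged = sorted(
        (it for it in items if it.fake_prob >= threshold),
        key=lambda it: it.fake_prob,
        reverse=True,
    )
    return [(it.name, it.fake_prob, icd203_term(it.fake_prob)) for it in flagged]


batch = [Item("clip_a.mp4", 0.97), Item("clip_b.mp4", 0.30), Item("clip_c.mp4", 0.62)]
for row in triage(batch):
    print(row)
# ('clip_a.mp4', 0.97, 'almost certain')
# ('clip_c.mp4', 0.62, 'likely')
```

Reporting in fixed ICD 203 wording, rather than raw percentages from each detector, is one way to give every method the same vocabulary in a report, provided the underlying probabilities are meaningful.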

Reception of the Original DeFake Tool and Explainability

Participants found the overall flow of the original DeFake tool intuitive and comprehensive, but they also identified areas where it could better fit their workflow, as summarized in the Desired Features section. We observed that participants tended to look at individual evaluation outcomes first and only then at the overall fakeness score. Although the score was generally understood, participants wanted clearer insight into how it was derived and calculated. They also intended to include evidence from the tool in their reports to communicate findings to decision-makers. Opinions on explainability varied, but a consistent preference emerged for detailed textual explanations. This prompted our team to recognize the need to adapt the level of detail to users with varying levels of expertise. Localization (pinpointing where and when in the media a manipulation occurs) and the ability to compare results also stood out as essential for most participants.

DeFake Tool: Bridging Technology and Analysts’ Needs

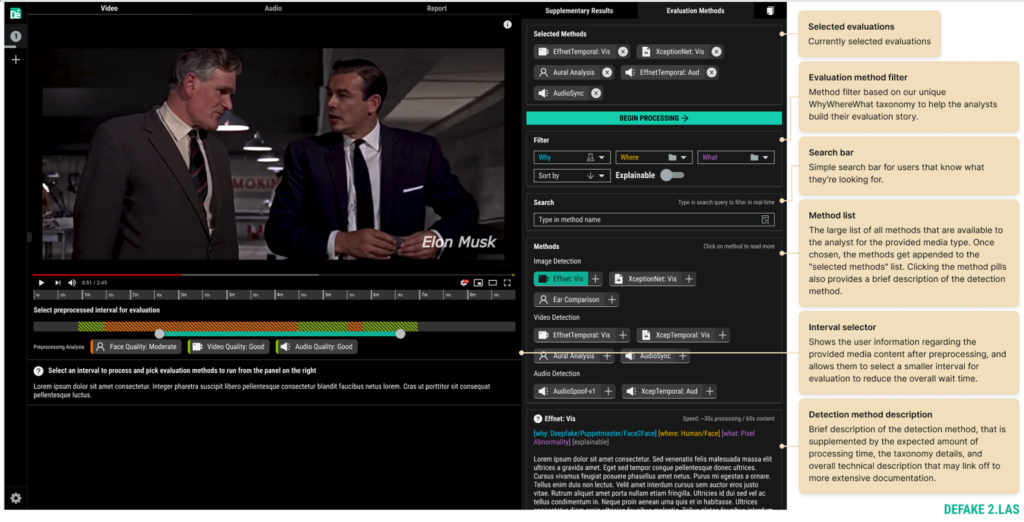

Building on the insights from the interviews, we made numerous modifications to the DeFake tool, shifting its focus from journalists, its original audience, to intelligence analysts. The redesigned interface centers on a large detection workbench, a command center of sorts, where analysts can view results from various evaluation methods across the selected analysis interval of the media.

The workbench has two main sections: the primary results area, which draws the most attention and contains all key interactive evaluation outcomes and notes, and the multipurpose sidebar, which provides access to secondary insights and application controls.

In the primary results area, the media under assessment appears at the top for visibility, followed by modular evaluation blocks. These blocks align each method’s results with the video timeline, making the outcomes easier to grasp intuitively. Each block also contains explainability sub-blocks specific to its evaluation method, along with notes and informational and warning labels. These method-specific explainability options are tailored to users with varying levels of forensic expertise, so that both novices and experts get meaningful insights.
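Aligning results with the video timeline implies turning per-frame scores into time intervals that can be drawn under the player. The following is a minimal sketch under assumptions of our own: one hypothetical method emits a score in [0, 1] per frame, a frame counts as suspicious at or above a threshold, and runs shorter than `min_frames` are discarded as flicker. It is not how DeFake’s evaluation blocks are actually computed.

```python
def flagged_intervals(scores, fps, threshold=0.5, min_frames=3):
    """Group consecutive frames scoring >= threshold into (start_s, end_s) intervals.

    scores: per-frame fakeness scores in [0, 1] from one (hypothetical) method.
    end_s is the time just after the last flagged frame. Runs shorter than
    min_frames are dropped to suppress single-frame flicker.
    """
    intervals = []
    start = None
    # A -inf sentinel closes any run that extends to the final frame.
    for i, s in enumerate(list(scores) + [float("-inf")]):
        if s >= threshold and start is None:
            start = i
        elif s < threshold and start is not None:
            if i - start >= min_frames:
                intervals.append((start / fps, i / fps))
            start = None
    return intervals


scores = [0.1, 0.2, 0.8, 0.9, 0.85, 0.3, 0.7, 0.1, 0.9, 0.95, 0.9, 0.92]
print(flagged_intervals(scores, fps=4))
# [(0.5, 1.25), (2.0, 3.0)]
```

The isolated high score at frame 6 is dropped because its run is only one frame long, which is the kind of trade-off between sensitivity and noise an analyst might want to adjust per method.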

The multipurpose sidebar, also modular, contains blocks with comprehensive information about the processed content, such as metadata, the overall fakeness score, the top fake faces, and an estimate of the deepfake manipulation method used. Beyond conventional metadata extraction, the DeFake tool incorporates provenance technology, extracting Content Credentials (CR) as specified by the Coalition for Content Provenance and Authenticity (C2PA). When present, these credentials provide a verifiable record of the content’s origin and edit history, complementing the detection results and enhancing the tool’s overall reliability.
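The article does not specify how the overall fakeness score is derived, and participants asked for exactly that insight. One transparent option, sketched here purely as an assumption, is a weighted average of per-method scores that also returns each method’s contribution, so the number shown in the sidebar can be decomposed in a report. The method names and weights are hypothetical.

```python
def overall_fakeness(method_scores, weights):
    """Weighted average of per-method scores, plus each method's share of the total.

    method_scores: {method_name: score in [0, 1]}
    weights: {method_name: non-negative weight}; both are hypothetical inputs.
    """
    total_w = sum(weights[m] for m in method_scores)
    if total_w <= 0:
        raise ValueError("at least one method needs a positive weight")
    contributions = {m: weights[m] * s / total_w for m, s in method_scores.items()}
    return sum(contributions.values()), contributions


scores = {"face_swap_cnn": 0.9, "lip_sync": 0.6, "frequency": 0.3}
weights = {"face_swap_cnn": 2.0, "lip_sync": 1.0, "frequency": 1.0}
overall, parts = overall_fakeness(scores, weights)
print(f"overall fakeness: {overall:.3f}")  # overall fakeness: 0.675
# List the methods by how much each one pushed the overall score up.
for name, c in sorted(parts.items(), key=lambda kv: kv[1], reverse=True):
    print(f"  {name}: {c:.3f}")
```

Because the contributions sum exactly to the overall score, an analyst can state which method drove a verdict instead of citing a single opaque number.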

Usability and explainability were focal points of the redesign. A separate sidebar tab offers a diverse range of evaluation methods for processing content. There, users can explore current methods and new ones as they are added, understand how they work, check their expected processing time, add them to the evaluation queue, and display their results in the primary results area. Because the number of methods, old and new, may be large, analysts can filter them using our Digital Media Forensic Taxonomy, whose rationale and design we describe later in this article. The taxonomy guides digital content analysis by connecting the “why,” “where,” and “what” of an investigation, aligning with analysts’ workflow and giving them a structured understanding of their choices. This approach eases the burden on analysts, letting them apply their expertise while handling deepfake content efficiently.
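Filtering the method catalog by taxonomy tags and checking expected processing time before queuing can be expressed as simple set operations. In this sketch, the `Method` record, the `why:`/`where:`/`what:` tag strings, the method names, and the per-minute time estimates are all hypothetical placeholders, not DeFake’s data model.

```python
from dataclasses import dataclass


@dataclass
class Method:
    name: str
    tags: frozenset  # hypothetical taxonomy tags such as "why:face-swap", "what:temporal"
    est_seconds_per_minute: float  # hypothetical processing cost per minute of media


def filter_methods(catalog, required_tags):
    """Return methods carrying every required taxonomy tag."""
    return [m for m in catalog if set(required_tags) <= m.tags]


def estimated_queue_seconds(methods, media_minutes):
    """Rough total processing time if all selected methods run on the media."""
    return sum(m.est_seconds_per_minute for m in methods) * media_minutes


catalog = [
    Method("blink-rate", frozenset({"why:face-swap", "where:face", "what:temporal"}), 20.0),
    Method("blending-boundary", frozenset({"why:face-swap", "where:face", "what:spatial"}), 45.0),
    Method("container-check", frozenset({"why:splicing", "where:file", "what:file-structure"}), 2.0),
]
selected = filter_methods(catalog, {"why:face-swap", "where:face"})
print([m.name for m in selected])              # ['blink-rate', 'blending-boundary']
print(estimated_queue_seconds(selected, 2.0))  # 130.0
```

Requiring every selected tag (a subset test) narrows the list as the analyst answers more of the why/where/what questions; an "any tag" filter would be the alternative if broader exploration were preferred.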

Before any analysis methods are applied, the preprocessing block evaluates the content’s quality. It tells users how effective different evaluation methods are likely to be and lets them select a shorter video snippet for assessment. Because forensic AI tools are resource-intensive, analyzing only a snippet can save considerable time and computation. Thanks to the modular design, users can select a snippet at any stage of the evaluation process.
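A preprocessing check of this kind can be as simple as comparing basic stream properties against thresholds and converting a selected snippet into a frame range. The thresholds below (480 lines, 500 kbps, 15 fps) and the `VideoInfo` fields are illustrative assumptions; in practice, suitable limits depend on each detection method, and the properties would come from a media probe such as ffprobe.

```python
from dataclasses import dataclass


@dataclass
class VideoInfo:
    width: int
    height: int
    fps: float
    bitrate_kbps: float
    duration_s: float


def quality_warnings(info, min_height=480, min_bitrate_kbps=500, min_fps=15):
    """Flag properties that may reduce detector effectiveness (thresholds are illustrative)."""
    warnings = []
    if info.height < min_height:
        warnings.append(f"low resolution ({info.width}x{info.height}): face-based methods may be less reliable")
    if info.bitrate_kbps < min_bitrate_kbps:
        warnings.append(f"heavy compression ({info.bitrate_kbps:.0f} kbps): subtle artifacts may be lost")
    if info.fps < min_fps:
        warnings.append(f"low frame rate ({info.fps:g} fps): temporal methods may be less reliable")
    return warnings


def snippet_frames(info, start_s, end_s):
    """Convert a selected snippet [start_s, end_s) into frame indices, clamped to the video."""
    start_s, end_s = max(0.0, start_s), min(info.duration_s, end_s)
    if end_s <= start_s:
        raise ValueError("empty snippet")
    return range(int(start_s * info.fps), int(end_s * info.fps))


info = VideoInfo(width=640, height=360, fps=30.0, bitrate_kbps=350.0, duration_s=120.0)
for w in quality_warnings(info):
    print("warning:", w)
frames = snippet_frames(info, 10.0, 15.0)
print(frames.start, frames.stop, len(frames))  # 300 450 150
```

Running only the 150 frames of the snippet instead of all 3,600 frames of the two-minute clip is where the efficiency gain described above comes from.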

A major pain point in the analyst’s workflow is that findings are scattered across disconnected tools. To address it, we introduced a tabbed, modular interface with a separate workspace for each analyzed content piece and an overview showing whether each piece was flagged. Although the current design does not fully solve the problem of multiple toolsets, its modular nature allows diverse evaluation methods to be integrated in the future. This sets the stage for a single tool that brings together the resources analysts need to analyze multimedia content thoroughly.

In essence, the updated DeFake tool serves as a link between advancing detection technology and the practical requirements of intelligence analysts.

Digital Media Forensic Taxonomy: Explainable and Consistent Terminology

The Digital Media Forensic Taxonomy is a structured framework designed to make terminology more consistent in the ever-evolving field of media forensics. It serves both as a resource for intelligence analysts and as the backbone of the DeFake tool’s method-filtering capabilities.

The taxonomy addresses the fundamental question of “why” users analyze digital media content, connecting to “where” they should focus their attention, and ultimately guiding them to “what” artifacts they should look for. This structure provides a solid foundation that can organically grow to meet evolving needs while maintaining a consistent method for tagging and filtering.

The “Why” section of the taxonomy delves into the motives behind analyzing digital media content. It covers a broad spectrum of manipulation types, including deep-learning-based manipulations like deepfakes and manual manipulations achieved through image editing software. Analysts can use this section as a reference point to assess unfamiliar or suspicious media content, categorizing it for further investigation.

The “Where” section breaks down the areas analysts should concentrate on when searching for evidence of manipulation. Each area links to the “What” section, which offers a structured approach to forensic techniques, categorized into file-structure, spatial, and temporal methods. While it points to specific artifacts or information to focus on, the “What” section leaves analysts free to choose the methods that suit their needs.
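The Why, Where, and What layering maps naturally onto a nested lookup. The excerpt below is a hypothetical toy version: the manipulation types, regions, and artifacts are illustrative entries rather than the contents of the actual taxonomy, and `artifacts_to_check` is an invented helper. Only the three What categories (file structure, spatial, temporal) follow the text.

```python
# Hypothetical excerpt: Why (manipulation) -> Where (region) -> What category -> artifacts.
TAXONOMY = {
    "face swap": {
        "face region": {
            "spatial": ["blending boundary around the face", "inconsistent skin texture"],
            "temporal": ["identity flicker between frames"],
        },
        "file container": {
            "file structure": ["traces of re-encoding in metadata"],
        },
    },
    "lip sync": {
        "mouth region": {
            "spatial": ["blurred teeth or inner mouth"],
            "temporal": ["mismatch between lip motion and audio"],
        },
    },
}

WHAT_CATEGORIES = frozenset({"file structure", "spatial", "temporal"})


def artifacts_to_check(why, categories=WHAT_CATEGORIES):
    """List (where, what_category, artifact) triples for one 'why'."""
    unknown = set(categories) - WHAT_CATEGORIES
    if unknown:
        raise ValueError(f"unknown What categories: {sorted(unknown)}")
    return [
        (where, category, artifact)
        for where, by_category in TAXONOMY.get(why, {}).items()
        for category, artifacts in by_category.items()
        if category in categories
        for artifact in artifacts
    ]


for row in artifacts_to_check("face swap", {"temporal", "file structure"}):
    print(row)
# ('face region', 'temporal', 'identity flicker between frames')
# ('file container', 'file structure', 'traces of re-encoding in metadata')
```

Rejecting unknown What categories keeps tagging consistent: a new method or artifact has to fit the shared vocabulary rather than introduce an ad hoc label.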

To showcase the taxonomy’s growth potential, we expanded the “Why” section to address the current state of deepfake technology, including content that may be fully synthetic.

Although the taxonomy centers primarily on visual media, it acknowledges the importance of audio and text in forensic work; further research is needed to incorporate these modalities for a more comprehensive approach.

The Digital Media Forensic Taxonomy is an effort to bring consistency and clarity to the language of media forensics. By addressing the challenges intelligence analysts face, it serves as a bridge between analysts and developers and as a guide for clearer communication among the various stakeholders of forensic reports.

Deepfake technology presents a formidable challenge, but through collaboration and innovation, we can equip intelligence analysts with the tools they need to meet it. Building an explainable deepfake detection tool is an ongoing effort, and we continue to develop DeFake to meet analysts’ evolving needs and ensure reliable assessments.
